Blog Archive for / 2008 /

Managing Threads with a Vector

Wednesday, 10 December 2008

One of the nice things about C++0x is the support for move

semantics that comes from the new Rvalue

Reference language feature. Since this is a language feature, it

means that we can easily have types that are movable but not

copyable without resorting to std::auto_ptr-like

hackery. One such type is the new std::thread class. A

thread of execution can only be associated with one

std::thread object at a time, so std::thread

is not copyable, but it is movable — this

allows you to transfer ownership of a thread of execution between

objects, and return std::thread objects from

functions. The important point for today's blog post is that it allows

you to store std::thread objects in containers.

Move-aware containers

The C++0x standard containers are required to be move-aware, and

move objects rather than copy them when changing

their position within the container. For existing copyable types that

don't have a specific move constructor or move-assignment

operator that takes an rvalue reference this amounts to the same

thing — when a std::vector is resized, or an

element is inserted in the middle, the elements will be copied to

their new locations. The important difference is that you can now

store types that only have a move-constructor and

move-assignment operator in the standard containers because the

objects are moved rather than copied.

This means that you can now write code like:

std::vector<std::thread> v; v.push_back(std::thread(some_function));

and it all "just works". This is good news for managing multiple

threads where the number of threads is not known until run-time

— if you're tuning the number of threads to the number of

processors, using

std::thread::hardware_concurrency() for example. It also

means that you can then use the

std::vector<std::thread> with the standard library

algorithms such as std::for_each:

void do_join(std::thread& t)

{

t.join();

}

void join_all(std::vector<std::thread>& v)

{

std::for_each(v.begin(),v.end(),do_join);

}

If you need an extra thread because one of your threads is blocked

waiting for something, you can just use insert() or

push_back() to add a new thread to the

vector. Of course you can also just move threads into or

out of the vector by indexing the elements directly:

std::vector<std::thread> v(std::thread::hardware_concurrency());

for(unsigned i=0;i<v.size();++i)

{

v[i]=std::thread(do_work);

}

In fact, many of the examples in my

book use std::vector<std::thread> for managing

the threads, as it's the simplest way to do it.

Other containers work too

It's not just std::vector that's required to be

move-aware — all the other standard containers are too. This

means you can have a std::list<std::thread>, or a

std::deque<std::thread>, or even a

std::map<int,std::thread>. In fact, the whole C++0x

standard library is designed to work with move-only types such as

std::thread.

Try it out today

Wouldn't it be nice if you could try it out today, and get used to

using containers of std::thread objects without having to

wait for a C++0x compiler? Well, you can — the

0.6 beta of the just::thread C++0x

Thread Library released last Friday provides a specialization of

std::vector<std::thread> so that you can write code

like in these examples and it will work with Microsoft Visual Studio

2008. Sign up at the just::thread

Support Forum to download it today.

Posted by Anthony Williams

[/ threading /] permanent link

Tags: threads, C++0x, vector, multithreading, concurrency

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

Peterson's lock with C++0x atomics

Friday, 05 December 2008

Bartosz Milewski shows an implementation of Peterson's locking algorithm in his latest post on C++ atomics and memory ordering. Dmitriy V'jukov posted an alternative implementation in the comments. Also in the comments, Bartosz says:

"So even though I don't have a formal proof, I believe my implementation of Peterson lock is correct. For all I know, Dmitriy's implementation might also be correct, but it's much harder to prove."

I'd like to offer an analysis of both algorithms to see if they are correct, below. However, before we start I'd also like to highlight a comment that Bartosz made in his conclusion:

"Any time you deviate from sequential consistency, you increase the complexity of the problem by orders of magnitude."

This is something I wholeheartedly agree with. If you weren't

convinced by my previous post on Memory

Models and Synchronization, maybe the proof below will convince

you to stick to memory_order_seq_cst (the default) unless

you really need to do otherwise.

C++0x memory ordering recap

In C++0x, we have to think about things in terms of the happens-before and synchronizes-with relationships described in the Standard — it's no good saying "it works on my CPU" because different CPUs have different default ordering constraints on basic operations such as load and store. In brief, those relationships are:

- Synchronizes-with

- An operation A synchronizes-with an

operation B if A is a store to some

atomic variable

m, with an ordering ofstd::memory_order_release, orstd::memory_order_seq_cst, B is a load from the same variablem, with an ordering ofstd::memory_order_acquireorstd::memory_order_seq_cst, and B reads the value stored by A. - Happens-before

- An operation A happens-before an

operation B if:

- A is performed on the same thread as B, and A is before B in program order, or

- A synchronizes-with B, or

- A happens-before some other operation C, and C happens-before B.

std::memory_order_consume, but this is enough for now.

If all your operations use std::memory_order_seq_cst,

then there is the additional constraint of total ordering, as I

mentioned before, but neither of the implementations in question use

any std::memory_order_seq_cst operations, so we

can leave that aside for now.

Now, let's look at the implementations.

Bartosz's implementation

I've extracted the code for Bartosz's implementation from his posts, and it is shown below:

class Peterson_Bartosz

{

private:

// indexed by thread ID, 0 or 1

std::atomic<bool> _interested[2];

// who's yielding priority?

std::atomic<int> _victim;

public:

Peterson_Bartosz()

{

_victim.store(0, std::memory_order_release);

_interested[0].store(false, std::memory_order_release);

_interested[1].store(false, std::memory_order_release);

}

void lock()

{

int me = threadID; // either 0 or 1

int he = 1 ? me; // the other thread

_interested[me].exchange(true, std::memory_order_acq_rel);

_victim.store(me, std::memory_order_release);

while (_interested[he].load(std::memory_order_acquire)

&& _victim.load(std::memory_order_acquire) == me)

continue; // spin

}

void unlock()

{

int me = threadID;

_interested[me].store(false,std::memory_order_release);

}

}

There are three things to prove with Peterson's lock:

- If thread 0 successfully acquires the lock, then thread 1 will not do so;

- If thread 0 acquires the lock and then releases it, then thread 1 will successfully acquire the lock;

- If thread 0 fails to acquire the lock, then thread 1 does so.

Let's look at each in turn.

If thread 0 successfully acquires the lock, then thread 1 will not do so

Initially _victim is 0, and the

_interested variables are both false. The

call to lock() from thread 0 will then set

_interested[0] to true, and

_victim to 0.

The loop then checks _interested[1], which is still

false, so we break out of the loop, and the lock is

acquired.

So, what about thread 1? Thread 1 now comes along and tries to

acquire the lock. It sets _interested[1] to

true, and _victim to 1, and then enters the

while loop. This is where the fun begins.

The first thing we check is _interested[0]. Now, we

know this was set to true in thread 0 as it acquired the

lock, but the important thing is: does the CPU running thread 1 know

that? Is it guaranteed by the memory model?

For it to be guaranteed by the memory model, we have to prove that

the store to _interested[0] from thread 0

happens-before the load from thread 1. This is trivially true

if we read true in thread 1, but that doesn't help: we

need to prove that we can't read false. We

therefore need to find a variable which was stored by thread 0, and

loaded by thread 1, and our search comes up empty:

_interested[1] is loaded by thread 1 as part of the

exchange call, but it is not written by thread 0, and

_victim is written by thread 1 without reading the value

stored by thread 0. Consequently, there is no ordering

guarantee on the read of _interested[0], and thread 1

may also break out of the while loop and acquire

the lock.

This implementation is thus broken. Let's now look at Dmitriy's implementation.

Dmitriy's implementation

Dmitriy posted his implementation in the comments using the syntax for his Relacy Race Detector tool, but it's trivially convertible to C++0x syntax. Here is the C++0x version of his code:

std::atomic<int> flag0(0),flag1(0),turn(0);

void lock(unsigned index)

{

if (0 == index)

{

flag0.store(1, std::memory_order_relaxed);

turn.exchange(1, std::memory_order_acq_rel);

while (flag1.load(std::memory_order_acquire)

&& 1 == turn.load(std::memory_order_relaxed))

std::this_thread::yield();

}

else

{

flag1.store(1, std::memory_order_relaxed);

turn.exchange(0, std::memory_order_acq_rel);

while (flag0.load(std::memory_order_acquire)

&& 0 == turn.load(std::memory_order_relaxed))

std::this_thread::yield();

}

}

void unlock(unsigned index)

{

if (0 == index)

{

flag0.store(0, std::memory_order_release);

}

else

{

flag1.store(0, std::memory_order_release);

}

}

So, how does this code fare?

If thread 0 successfully acquires the lock, then thread 1 will not do so

Initially the turn, flag0 and

flag1 variables are all 0. The call to

lock() from thread 0 will then set flag0 to

1, and turn to 1. These variables are essentially

equivalent to the variables in Bartosz's implementation, but

turn is set to 0 when _victim is set to 1,

and vice-versa. That doesn't affect the logic of the code.

The loop then checks flag1, which is still 0, so we

break out of the loop, and the lock is acquired.

So, what about thread 1? Thread 1 now comes along and tries to

acquire the lock. It sets flag1 to 1, and

turn to 0, and then enters the while

loop. This is where the fun begins.

As before, the first thing we check is flag0. Now, we

know this was set to 1 in thread 0 as it acquired the lock, but the

important thing is: does the CPU running thread 1 know that? Is it

guaranteed by the memory model?

Again, for it to be guaranteed by the memory model, we have to

prove that the store to flag0 from thread 0

happens-before the load from thread 1. This is trivially true

if we read 1 in thread 1, but that doesn't help: we need to prove that

we can't read 0. We therefore need to find a variable which

was stored by thread 0, and loaded by thread 1, as before.

This time our search is successful: turn is set using

an exchange operation, which is a read-modify-write

operation. Since it uses std::memory_order_acq_rel memory

ordering, it is both a load-acquire and a store-release. If the load

part of the exchange reads the value written by thread 0,

we're home dry: turn is stored with a similar

exchange operation with

std::memory_order_acq_rel in thread 0, so the store from

thread 0 synchronizes-with the load from thread 1.

This means that the store to flag0 from thread 0

happens-before the exchange on turn

in thread 1, and thus happens-before the load in

the while loop. The load in the

while loop thus reads 1 from flag0, and

proceeds to check turn.

Now, since the store to turn from thread 0

happens-before the store from thread 1 (we're relying on that

for the happens-before relationship on flag0,

remember), we know that the value to be read will be the value we

stored in thread 1: 0. Consequently, we keep looping.

OK, so if the store to turn in thread 1 reads the

value stored by thread 0 then thread 1 will stay out of the lock, but

what if it doesn't read the value store by thread 0? In this

case, we know that the exchange call from thread 0 must

have seen the value written by the exchange in thread 1

(writes to a single atomic variable always become visible in the same

order for all threads), which means that the write to

flag1 from thread 1 happens-before the read in

thread 0 and so thread 0 cannot have acquired the lock. Since this was

our initial assumption (thread 0 has acquired the lock), we're home

dry — thread 1 can only acquire the lock if thread 0 didn't.

If thread 0 acquires the lock and then releases it, then thread 1 will successfully acquire the lock

OK, so we've got as far as thread 0 acquiring the lock and thread 1

waiting. What happens if thread 0 now releases the lock? It does this

simply by writing 0 to flag0. The while loop

in thread 1 checks flag0 every time round, and breaks out

if the value read is 0. Therefore, thread 1 will eventually acquire

the mutex. Of course, there is no guarantee when it will

acquire the mutex — it might take arbitrarily long for the the

write to flag0 to make its way to thread 1, but it will

get there in the end. Since flag0 is never written by

thread 1, it doesn't matter whether thread 0 has already released the

lock when thread 1 starts waiting, or whether thread 1 is already

waiting — the while loop will still terminate, and

thread 1 will acquire the lock in both cases.

That just leaves our final check.

If thread 0 fails to acquire the lock, then thread 1 does so

We've essentially already covered this when we checked that thread

1 doesn't acquire the lock if thread 0 does, but this time we're going

in reverse. If thread 0 doesn't acquire the lock, it is because it

sees flag1 as 1 and turn as 1. Since

flag1 is only written by thread 1, if it is 1 then thread

1 must have at least called lock(). If thread 1 has

called unlock then eventually flag1 will be

read as 0, so thread 0 will acquire the lock. So, let's assume for now

that thread 1 hasn't got that far, so flag1 is still

1. The next check is for turn to be 1. This is the value

written by thread 0. If we read it as 1 then either the write to

turn from thread 1 has not yet become visible to thread

0, or the write happens-before the write by thread 0, so the

write from thread 0 overwrote the old value.

If the write from thread 1 happens-before the write from

thread 0 then thread 1 will eventually see turn as 1

(since the last write is by thread 0), and thus thread 1 will acquire

the lock. On the other hand, if the write to turn from

thread 0 happens-before the write to turn from

thread 1, then thread 0 will eventually see the turn as 0

and acquire the lock. Therefore, for thread 0 to be stuck waiting the

last write to turn must have been by thread 0, which

implies thread 1 will eventually get the lock.

Therefore, Dmitriy's implementation works.

Differences, and conclusion

The key difference between the implementations other than the

naming of the variables is which variable the exchange

operation is applied to. In Bartosz's implementation, the

exchange is applied to _interested[me],

which is only ever written by one thread for a given value of

me. In Dmitriy's implementation, the

exchange is applied to the turn variable,

which is the variable updated by both threads. It therefore acts as a

synchronization point for the threads. This is the key to the whole

algorithm — even though many of the operations in Dmitriy's

implementation use std::memory_order_relaxed, whereas

Bartosz's implementation uses std::memory_order_acquire

and std::memory_order_release everywhere, the single

std::memory_order_acq_rel on the exchange on

the right variable is enough.

I'd like to finish by repeating Bartosz's statement about relaxed memory orderings:

"Any time you deviate from sequential consistency, you increase the complexity of the problem by orders of magnitude."

Posted by Anthony Williams

[/ threading /] permanent link

Tags: concurrency, C++0x, atomics, memory model, synchronization

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

Rvalue References and Perfect Forwarding in C++0x

Wednesday, 03 December 2008

One of the new features

in C++0x

is the rvalue reference. Whereas the a "normal" lvalue

reference is declared with a single ampersand &,

an rvalue reference is declared with two

ampersands: &&. The key difference is of course

that an rvalue reference can bind to an rvalue, whereas a

non-const lvalue reference cannot. This is primarily used

to support move semantics for expensive-to-copy objects:

class X

{

std::vector<double> data;

public:

X():

data(100000) // lots of data

{}

X(X const& other): // copy constructor

data(other.data) // duplicate all that data

{}

X(X&& other): // move constructor

data(std::move(other.data)) // move the data: no copies

{}

X& operator=(X const& other) // copy-assignment

{

data=other.data; // copy all the data

return *this;

}

X& operator=(X && other) // move-assignment

{

data=std::move(other.data); // move the data: no copies

return *this;

}

};

X make_x(); // build an X with some data

int main()

{

X x1;

X x2(x1); // copy

X x3(std::move(x1)); // move: x1 no longer has any data

x1=make_x(); // return value is an rvalue, so move rather than copy

}

Though move semantics are powerful, rvalue references offer more than that.

Perfect Forwarding

When you combine rvalue references with function templates you get an interesting interaction: if the type of a function parameter is an rvalue reference to a template type parameter then the type parameter is deduce to be an lvalue reference if an lvalue is passed, and a plain type otherwise. This sounds complicated, so lets look at an example:

template<typename T>

void f(T&& t);

int main()

{

X x;

f(x); // 1

f(X()); // 2

}

The function template f meets our criterion above, so

in the call f(x) at the line marked "1", the template

parameter T is deduced to be X&,

whereas in the line marked "2", the supplied parameter is an rvalue

(because it's a temporary), so T is deduced to

be X.

Why is this useful? Well, it means that a function template can

pass its arguments through to another function whilst retaining the

lvalue/rvalue nature of the function arguments by

using std::forward. This is called "perfect

forwarding", avoids excessive copying, and avoids the template

author having to write multiple overloads for lvalue and rvalue

references. Let's look at an example:

void g(X&& t); // A

void g(X& t); // B

template<typename T>

void f(T&& t)

{

g(std::forward<T>(t));

}

void h(X&& t)

{

g(t);

}

int main()

{

X x;

f(x); // 1

f(X()); // 2

h(x);

h(X()); // 3

}

This time our function f forwards its argument to a

function g which is overloaded for lvalue and rvalue

references to an X object. g will

therefore accept lvalues and rvalues alike, but overload resolution

will bind to a different function in each case.

At line "1", we pass a named X object

to f, so T is deduced to be an lvalue

reference: X&, as we saw above. When T

is an lvalue reference, std::forward<T> is a

no-op: it just returns its argument. We therefore call the overload

of g that takes an lvalue reference (line B).

At line "2", we pass a temporary to f,

so T is just plain X. In this

case, std::forward<T>(t) is equivalent

to static_cast<T&&>(t): it ensures that

the argument is forwarded as an rvalue reference. This means that

the overload of g that takes an rvalue reference is

selected (line A).

This is called perfect forwarding because the same

overload of g is selected as if the same argument was

supplied to g directly. It is essential for library

features such as std::function

and std::thread which pass arguments to another (user

supplied) function.

Note that this is unique to template functions: we can't do this

with a non-template function such as h, since we don't

know whether the supplied argument is an lvalue or an rvalue. Within

a function that takes its arguments as rvalue references, the named

parameter is treated as an lvalue reference. Consequently the call

to g(t) from h always calls the lvalue

overload. If we changed the call

to g(std::forward<X>(t)) then it would always

call the rvalue-reference overload. The only way to do this with

"normal" functions is to create two overloads: one for lvalues and

one for rvalues.

Now imagine that we remove the overload of g for

rvalue references (delete line A). Calling f with an

rvalue (line 2) will now fail to compile because you can't

call g with an rvalue. On the other hand, our call

to h with an rvalue (line 3) will still

compile however, since it always calls the lvalue-reference

overload of g. This can lead to interesting problems

if g stores the reference for later use.

Further Reading

For more information, I suggest reading the accepted rvalue reference paper and "A Brief Introduction to Rvalue References", as well as the current C++0x working draft.

Posted by Anthony Williams

[/ cplusplus /] permanent link

Tags: rvalue reference, cplusplus, C++0x, forwarding

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

Memory Models and Synchronization

Monday, 24 November 2008

I have read a couple of posts on memory models over the couple of weeks: one from Jeremy Manson on What Volatile Means in Java, and one from Bartosz Milewski entitled Who ordered sequential consistency?. Both of these cover a Sequentially Consistent memory model — in Jeremy's case because sequential consistency is required by the Java Memory Model, and in Bartosz' case because he's explaining what it means to be sequentially consistent, and why we would want that.

In a sequentially consistent memory model, there is a single total order of all atomic operations which is the same across all processors in the system. You might not know what the order is in advance, and it may change from execution to execution, but there is always a total order.

This is the default for the new C++0x atomics, and required for

Java's volatile, for good reason — it is

considerably easier to reason about the behaviour of code that uses

sequentially consistent orderings than code that uses a more relaxed

ordering.

The thing is, C++0x atomics are only sequentially consistent by default — they also support more relaxed orderings.

Relaxed Atomics and Inconsistent Orderings

I briefly touched on the properties of relaxed atomic operations in my presentation on The Future of Concurrency in C++ at ACCU 2008 (see the slides). The key point is that relaxed operations are unordered. Consider this simple example with two threads:

#include <thread>

#include <cstdatomic>

std::atomic<int> x(0),y(0);

void thread1()

{

x.store(1,std::memory_order_relaxed);

y.store(1,std::memory_order_relaxed);

}

void thread2()

{

int a=y.load(std::memory_order_relaxed);

int b=x.load(std::memory_order_relaxed);

if(a==1)

assert(b==1);

}

std::thread t1(thread1);

std::thread t2(thread2);

All the atomic operations here are using

memory_order_relaxed, so there is no enforced

ordering. Therefore, even though thread1 stores

x before y, there is no guarantee that the

writes will reach thread2 in that order: even if

a==1 (implying thread2 has seen the result

of the store to y), there is no guarantee that

b==1, and the assert may fire.

If we add more variables and more threads, then each thread may see a different order for the writes. Some of the results can be even more surprising than that, even with two threads. The C++0x working paper features the following example:

void thread1()

{

int r1=y.load(std::memory_order_relaxed);

x.store(r1,std::memory_order_relaxed);

}

void thread2()

{

int r2=x.load(std::memory_order_relaxed);

y.store(42,std::memory_order_relaxed);

assert(r2==42);

}

There's no ordering between threads, so thread1 might

see the store to y from thread2, and thus

store the value 42 in x. The fun part comes because the

load from x in thread2 can be reordered

after everything else (even the store that occurs after it in the same

thread) and thus load the value 42! Of course, there's no guarantee

about this, so the assert may or may not fire — we

just don't know.

Acquire and Release Ordering

Now you've seen quite how scary life can be with relaxed operations, it's time to look at acquire and release ordering. This provides pairwise synchronization between threads — the thread doing a load sees all the changes made before the corresponding store in another thread. Most of the time, this is actually all you need — you still get the "two cones" effect described in Jeremy's blog post.

With acquire-release ordering, independent reads of variables written independently can still give different orders in different threads, so if you do that sort of thing then you still need to think carefully. e.g.

std::atomicx(0),y(0); void thread1() { x.store(1,std::memory_order_release); } void thread2() { y.store(1,std::memory_order_release); } void thread3() { int a=x.load(std::memory_order_acquire); int b=y.load(std::memory_order_acquire); } void thread4() { int c=x.load(std::memory_order_acquire); int d=y.load(std::memory_order_acquire); }

Yes, thread3 and thread4 have the same

code, but I separated them out to make it clear we've got two separate

threads. In this example, the stores are on separate threads, so there

is no ordering between them. Consequently the reader threads may see

the writes in either order, and you might get a==1 and

b==0 or vice versa, or both 1 or both 0. The fun part is

that the two reader threads might see opposite

orders, so you have a==1 and b==0, but

c==0 and d==1! With sequentially consistent

code, both threads must see consistent orderings, so this would be

disallowed.

Summary

The details of relaxed memory models can be confusing, even for experts. If you're writing code that uses bare atomics, stick to sequential consistency until you can demonstrate that this is causing an undesirable impact on performance.

There's a lot more to the C++0x memory model and atomic operations than I can cover in a blog post — I go into much more depth in the chapter on atomics in my book.

Posted by Anthony Williams

[/ threading /] permanent link

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

First Review of C++ Concurrency in Action

Monday, 24 November 2008

A Dean Michael Berris has just published the first review of C++ Concurrency in Action that I've seen over on his blog. Thanks for your kind words, Dean!

C++ Concurrency in Action is not yet finished, but you can buy a copy now under the Manning Early Access Program and you'll get a PDF with the current chapters (plus updates as I write new chapters) and either a PDF or hard copy of the book (your choice) when it's finished.

Posted by Anthony Williams

[/ news /] permanent link

Tags: review, C++, cplusplus, concurrency, book

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

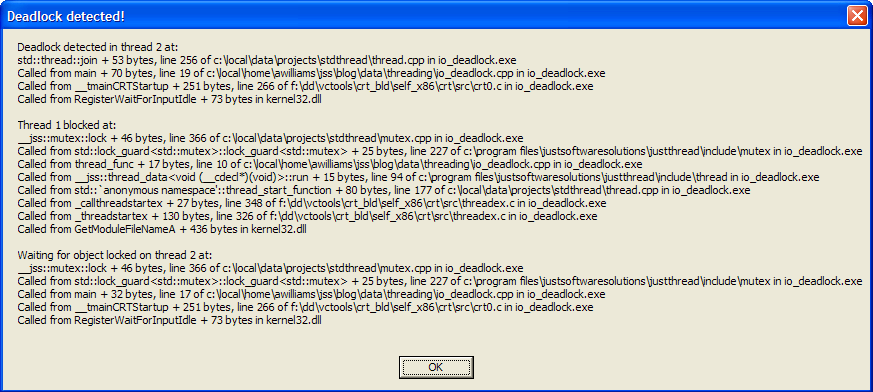

Deadlock Detection with just::thread

Wednesday, 12 November 2008

One of the biggest problems with multithreaded programming is the

possibility of deadlocks. In the excerpt from my

book published over at codeguru.com (Deadlock:

The problem and a solution) I discuss various ways of dealing with

deadlock, such as using std::lock when acquiring multiple

locks at once and acquiring locks in a fixed order.

Following such guidelines requires discipline, especially on large

code bases, and occasionally we all slip up. This is where the

deadlock detection mode of the

just::thread library comes in: if you compile your

code with deadlock detection enabled then if a deadlock occurs the

library will display a stack trace of the deadlock threads

and the locations at which the synchronization

objects involved in the deadlock were locked.

Let's look at the following simple code for an example.

#include <thread>

#include <mutex>

#include <iostream>

std::mutex io_mutex;

void thread_func()

{

std::lock_guard<std::mutex> lk(io_mutex);

std::cout<<"Hello from thread_func"<<std::endl;

}

int main()

{

std::thread t(thread_func);

std::lock_guard<std::mutex> lk(io_mutex);

std::cout<<"Hello from main thread"<<std::endl;

t.join();

return 0;

}

Now, it is obvious just from looking at the code that there's a

potential deadlock here: the main thread holds the lock on

io_mutex across the call to

t.join(). Therefore, if the main thread manages to lock

the io_mutex before the new thread does then the program

will deadlock: the main thread is waiting for thread_func

to complete, but thread_func is blocked on the

io_mutex, which is held by the main thread!

Compile the code and run it a few times: eventually you should hit the deadlock. In this case, the program will output "Hello from main thread" and then hang. The only way out is to kill the program.

Now compile the program again, but this time with

_JUST_THREAD_DEADLOCK_CHECK defined — you can

either define this in your project settings, or define it in the first

line of the program with #define. It must be defined

before any of the thread library headers are included

in order to take effect. This time the program doesn't hang —

instead it displays a message box with the title "Deadlock Detected!"

looking similar to the following:

Of course, you need to have debug symbols for your executable to get meaningful stack traces.

Anyway, this message box shows three stack traces. The first is

labelled "Deadlock detected in thread 2 at:", and tells us that the

deadlock was found in the call to std::thread::join from

main, on line 19 of our source file

(io_deadlock.cpp). Now, it's important to note that "line 19" is

actually where execution will resume when join returns

rather than the call site, so in this case the call to

join is on line 18. If the next statement was also on

line 18, the stack would report line 18 here.

The next stack trace is labelled "Thread 1 blocked at:", and tells

us where the thread we're trying to join with is blocked. In this

case, it's blocked in the call to mutex::lock from the

std::lock_guard constructor called from

thread_func returning to line 10 of our source file (the

constructor is on line 9).

The final stack trace completes the circle by telling us where that

mutex was locked. In this case the label says "Waiting for object

locked on thread 2 at:", and the stack trace tells us it was the

std::lock_guard constructor in main

returning to line 17 of our source file.

This is all the information we need to see the deadlock in this case, but in more complex cases we might need to go further up the call stack, particularly if the deadlock occurs in a function called from lots of different threads, or the mutex being used in the function depends on its parameters.

The just::thread deadlock

detection can help there too: if you're running the application from

within the IDE, or you've got a Just-in-Time debugger installed then

the application will now break into the debugger. You can then use the

full capabilities of your debugger to examine the state of the

application when the deadlock occurred.

Try It Out

You can download sample Visual C++ Express 2008 project for this

example, which you can use with our just::thread

implementation of the new C++0x thread library. The code should also

work with g++.

just::thread

doesn't just work with Microsoft Visual Studio 2008 — it's also

available for g++ 4.3 on Ubuntu Linux. Get your copy

today and try out the deadlock detection feature

risk free with our 30-day money-back guarantee.

Posted by Anthony Williams

[/ threading /] permanent link

Tags: multithreading, deadlock, c++

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

Detect Deadlocks with just::thread C++0x Thread Library Beta V0.2

Saturday, 01 November 2008

I am pleased to announce that the second beta of just::thread, our C++0x Thread Library is

available, which now features deadlock detection for uses of

std::mutex. You can sign up at the just::thread Support

forum to download the beta or send an email to beta@stdthread.co.uk.

The just::thread library is a complete implementation

of the new C++0x thread library as per the current

C++0x working paper. Features include:

std::threadfor launching threads.- Mutexes and condition variables.

std::promise,std::packaged_task,std::unique_futureandstd::shared_futurefor transferring data between threads.- Support for the new

std::chronotime interface for sleeping and timeouts on locks and waits. - Atomic operations with

std::atomic. - Support for

std::exception_ptrfor transferring exceptions between threads. - New in beta 0.2: support for detecting deadlocks with

std::mutex

The library works with Microsoft Visual Studio 2008 or Microsoft Visual C++ 2008 Express for 32-bit Windows. Don't wait for a full C++0x compiler: start using the C++0x thread library today.

Sign up at the just::thread Support forum to download the beta.

Posted by Anthony Williams

[/ news /] permanent link

Tags: multithreading, concurrency, C++0x

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

just::thread C++0x Thread Library Beta V0.1 Released

Thursday, 16 October 2008

Update: just::thread was released on 8th January 2009. The just::thread C++0x thread library is currently available for purchase for Microsoft Visual Studio 2005, 2008 and 2010 for Windows and gcc 4.3, 4.4 and 4.5 for x86 Ubuntu Linux.

I am pleased to announce that just::thread, our C++0x Thread Library is now available as a beta release. You can sign up at the just::thread Support forum to download the beta or send an email to beta@stdthread.co.uk.

Currently, it only works with Microsoft Visual Studio 2008 or Microsoft Visual C++ 2008 Express for 32-bit Windows, though support for other compilers and platforms is in the pipeline.

Though there are a couple of limitations (such as the number of

arguments that can be supplied to a thread function, and the lack of

custom allocator support for std::promise), it is a

complete implementation of the new C++0x thread library as per the

current

C++0x working paper. Features include:

std::threadfor launching threads.- Mutexes and condition variables.

std::promise,std::packaged_task,std::unique_futureandstd::shared_futurefor transferring data between threads.- Support for the new

std::chronotime interface for sleeping and timeouts on locks and waits. - Atomic operations with

std::atomic. - Support for

std::exception_ptrfor transferring exceptions between threads.

Please sign up and download the beta today. The library should be going on sale by the end of November.

Please report bugs on the just::thread Support Forum or email to beta@stdthread.co.uk.

Posted by Anthony Williams

[/ news /] permanent link

Tags: multithreading, concurrency, C++0x

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

October 2008 C++ Standards Committee Mailing - New C++0x Working Paper, More Concurrency Papers Approved

Wednesday, 08 October 2008

The October 2008 mailing for the C++ Standards Committee was published today. This is a really important mailing, as it contains the latest edition of the C++0x Working Draft, which was put out as a formal Committee Draft at the September 2008 meeting. This means it is up for formal National Body voting and comments, and could in principle be the text of C++0x. Of course, there are still many issues with the draft and it will not be approved as-is, but it is "feature complete": if a feature is not in this draft it will not be in C++0x. The committee intends to fix the issues and have a final draft ready by this time next year.

Concurrency Papers

As usual, there's a number of concurrency-related papers that have been incorporated into the working draft. Some of these are from this mailing, and some from prior mailings. Let's take a look at each in turn:

- N2752: Proposed Text for Bidirectional Fences

- This paper modifies the wording for the use of fences in C++0x. It

is a new revision of N2731:

Proposed Text for Bidirectional Fences, and is the version voted

into the working paper. Now this paper has been accepted, fences are

no longer tied to specific atomic variables, but are represented by

the free functions

std::atomic_thread_fence()andstd::atomic_signal_fence(). This brings C++0x more in line with current CPU instruction sets, where fences are generally separate instructions with no associated object.std::atomic_signal_fence()just restricts the compiler's freedom to reorder variable accesses, whereasstd::atomic_thread_fence()will typically also cause the compiler to emit the specific synchronization instructions necessary to enforce the desired memory ordering. - N2782: C++ Data-Dependency Ordering: Function Annotation

- This is a revision of N2643:

C++ Data-Dependency Ordering: Function Annotation, and is the

final version voted in to the working paper. It allows functions to be

annotated with

[[carries_dependency]](using the just-accepted attributes proposal) on their parameters and return value. This can allow implementations to better-optimize code that usesstd::memory_order_consumememory ordering. - N2783: Collected Issues with Atomics

- This paper resolves LWG issues 818, 845, 846 and 864. This rewords

the descriptions of the memory ordering values to make it clear what

they mean, removes the

explicitqualification on thestd::atomic_xxxconstructors to allow implicit conversion on construction (and thus allow aggregate-style initialization), and adds simple definitions of the constructors for the atomic types (which were omitted by accident). - N2668: Concurrency Modifications to Basic String

- This has been under discussion for a while, but was finally

approved at the September meeting. The changes in this paper ensure

that it is safe for two threads to access the same

std::stringobject at the same time, provided they both perform only read operations. They also ensure that copying a string object and then modifying that copy is safe, even if another thread is accessing the original. This essentially disallows copy-on-write implementations since the benefits are now severely limited. - N2748: Strong Compare and Exchange

- This paper was in the previous mailing, and has now been

approved. In the previous working paper, the atomic

compare_exchangefunctions were allowed to fail "spuriously" even when the value of the object was equal to the comparand. This allows efficient implementation on a wider variety of platforms than otherwise, but also requires almost all uses ofcompare_exchangeto be put in a loop. Now this paper has been accepted, instead we provide two variants:compare_exchange_weakandcompare_exchange_strong. The weak variant allows spurious failure, whereas the strong variant is not allowed to fail spuriously. On architectures which provide the strong variant by default (such as x86) this would remove the need for a loop in some cases. - N2760: Input/Output Library Thread Safety

- This paper clarifies that unsynchronized access to I/O streams

from multiple threads is a data race. For most streams this means the

user is responsible for providing this synchronization. However, for

the standard stream objects (

std::cin,std::cout,std::cerrand friends) such external synchronization is only necessary if the user has calledstd::ios_base::sync_with_stdio(false). - N2775: Small library thread-safety revisions

- This short paper clarifies that the standard library functions may only access the data and call the functions that they are specified to do. This makes it easier to identify and eliminate potential data races when using standard library functions.

- N2671: An Asynchronous Future Value: Proposed Wording

- Futures are finally in C++0x! This paper from the June 2008

mailing gives us

std::unique_future<>,std::shared_future<>andstd::promise<>, which can be used for transferring the results of operations safely between threads. - N2709: Packaging Tasks for Asynchronous Execution

- Packaged Tasks are also in C++0x! This is my paper from the July

2008 mailing, which is the counterpart to N2671. A

std::packaged_task<F>is very similar to astd::function<F>except that rather than returning the result directly when it is invoked, the result is stored in the associated futures. This makes it easy to spawn functions with return values on threads, and provides a building block for thread pools.

Other Changes

The biggest change to the C++0x working paper is of course the acceptance of Concepts. There necessary changes are spread over a staggering 14 Concepts-related papers, all of which were voted in to the working draft at the September 2008 meeting.

C++0x now also has support for user-defined literals (N2765:

User-defined Literals (aka. Extensible Literals (revision 5))),

for default values of non-static data members to be

defined in the class definition (N2756:

Non-static data member initializers), and forward declaration of

enums (N2764:

Forward declaration of enumerations (rev. 3)).

Get Involved: Comment on the C++0x Draft

Please read the latest C++0x Working Draft and comment on it. If you post comments on this blog entry I'll see that the committee gets to see them, but I strongly urge you to get involved with your National Body: the only changes allowed to C++0x now are in response to official National Body comments. If you're in the UK, contact me and I'll put you in touch with the relevant people on the BSI panel.

Posted by Anthony Williams

[/ cplusplus /] permanent link

Tags: C++0x, C++, standards, concurrency

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

"Deadlock: The Problem and a Solution" Book Excerpt Online

Wednesday, 01 October 2008

An excerpt from my book C++ Concurrency in Action

has been published on CodeGuru. Deadlock:

the Problem and a Solution describes what deadlock is and how the

std::lock() function can be used to avoid it where

multiple locks can be acquired at once. There are also some simple

guidelines for avoiding deadlock in the first place.

The C++0x library facilities mentioned in the article

(std::mutex, std::lock(),

std::lock_guard and std::unique_lock) are

all available from the Boost

Thread Library in release 1.36.0 and later.

Posted by Anthony Williams

[/ news /] permanent link

Tags: multithreading, c++, deadlock, concurrency

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

Implementing a Thread-Safe Queue using Condition Variables (Updated)

Tuesday, 16 September 2008

One problem that comes up time and again with multi-threaded code is how to transfer data from one thread to another. For example, one common way to parallelize a serial algorithm is to split it into independent chunks and make a pipeline — each stage in the pipeline can be run on a separate thread, and each stage adds the data to the input queue for the next stage when it's done. For this to work properly, the input queue needs to be written so that data can safely be added by one thread and removed by another thread without corrupting the data structure.

Basic Thread Safety with a Mutex

The simplest way of doing this is just to put wrap a non-thread-safe queue, and protect it with a mutex (the examples use the types and functions from the upcoming 1.35 release of Boost):

template<typename Data>

class concurrent_queue

{

private:

std::queue<Data> the_queue;

mutable boost::mutex the_mutex;

public:

void push(const Data& data)

{

boost::mutex::scoped_lock lock(the_mutex);

the_queue.push(data);

}

bool empty() const

{

boost::mutex::scoped_lock lock(the_mutex);

return the_queue.empty();

}

Data& front()

{

boost::mutex::scoped_lock lock(the_mutex);

return the_queue.front();

}

Data const& front() const

{

boost::mutex::scoped_lock lock(the_mutex);

return the_queue.front();

}

void pop()

{

boost::mutex::scoped_lock lock(the_mutex);

the_queue.pop();

}

};

This design is subject to race conditions between calls to empty, front and pop if there

is more than one thread removing items from the queue, but in a single-consumer system (as being discussed here), this is not a

problem. There is, however, a downside to such a simple implementation: if your pipeline stages are running on separate threads,

they likely have nothing to do if the queue is empty, so they end up with a wait loop:

while(some_queue.empty())

{

boost::this_thread::sleep(boost::posix_time::milliseconds(50));

}

Though the sleep avoids the high CPU consumption of a direct busy wait, there are still some obvious downsides to

this formulation. Firstly, the thread has to wake every 50ms or so (or whatever the sleep period is) in order to lock the mutex,

check the queue, and unlock the mutex, forcing a context switch. Secondly, the sleep period imposes a limit on how fast the thread

can respond to data being added to the queue — if the data is added just before the call to sleep, the thread

will wait at least 50ms before checking for data. On average, the thread will only respond to data after about half the sleep time

(25ms here).

Waiting with a Condition Variable

As an alternative to continuously polling the state of the queue, the sleep in the wait loop can be replaced with a condition

variable wait. If the condition variable is notified in push when data is added to an empty queue, then the waiting

thread will wake. This requires access to the mutex used to protect the queue, so needs to be implemented as a member function of

concurrent_queue:

template<typename Data>

class concurrent_queue

{

private:

boost::condition_variable the_condition_variable;

public:

void wait_for_data()

{

boost::mutex::scoped_lock lock(the_mutex);

while(the_queue.empty())

{

the_condition_variable.wait(lock);

}

}

void push(Data const& data)

{

boost::mutex::scoped_lock lock(the_mutex);

bool const was_empty=the_queue.empty();

the_queue.push(data);

if(was_empty)

{

the_condition_variable.notify_one();

}

}

// rest as before

};

There are three important things to note here. Firstly, the lock variable is passed as a parameter to

wait — this allows the condition variable implementation to atomically unlock the mutex and add the thread to the

wait queue, so that another thread can update the protected data whilst the first thread waits.

Secondly, the condition variable wait is still inside a while loop — condition variables can be subject to

spurious wake-ups, so it is important to check the actual condition being waited for when the call to wait

returns.

Be careful when you notify

Thirdly, the call to notify_one comes after the data is pushed on the internal queue. This avoids the

waiting thread being notified if the call to the_queue.push throws an exception. As written, the call to

notify_one is still within the protected region, which is potentially sub-optimal: the waiting thread might wake up

immediately it is notified, and before the mutex is unlocked, in which case it will have to block when the mutex is reacquired on

the exit from wait. By rewriting the function so that the notification comes after the mutex is unlocked, the

waiting thread will be able to acquire the mutex without blocking:

template<typename Data>

class concurrent_queue

{

public:

void push(Data const& data)

{

boost::mutex::scoped_lock lock(the_mutex);

bool const was_empty=the_queue.empty();

the_queue.push(data);

lock.unlock(); // unlock the mutex

if(was_empty)

{

the_condition_variable.notify_one();

}

}

// rest as before

};

Reducing the locking overhead

Though the use of a condition variable has improved the pushing and waiting side of the interface, the interface for the consumer

thread still has to perform excessive locking: wait_for_data, front and pop all lock the

mutex, yet they will be called in quick succession by the consumer thread.

By changing the consumer interface to a single wait_and_pop function, the extra lock/unlock calls can be avoided:

template<typename Data>

class concurrent_queue

{

public:

void wait_and_pop(Data& popped_value)

{

boost::mutex::scoped_lock lock(the_mutex);

while(the_queue.empty())

{

the_condition_variable.wait(lock);

}

popped_value=the_queue.front();

the_queue.pop();

}

// rest as before

};

Using a reference parameter to receive the result is used to transfer ownership out of the queue in order to avoid the exception

safety issues of returning data by-value: if the copy constructor of a by-value return throws, then the data has been removed from

the queue, but is lost, whereas with this approach, the potentially problematic copy is performed prior to modifying the queue (see

Herb Sutter's Guru Of The Week #8 for a discussion of the issues). This does, of

course, require that an instance Data can be created by the calling code in order to receive the result, which is not

always the case. In those cases, it might be worth using something like boost::optional to avoid this requirement.

Handling multiple consumers

As well as removing the locking overhead, the combined wait_and_pop function has another benefit — it

automatically allows for multiple consumers. Whereas the fine-grained nature of the separate functions makes them subject to race

conditions without external locking (one reason why the authors of the SGI

STL advocate against making things like std::vector thread-safe — you need external locking to do many common

operations, which makes the internal locking just a waste of resources), the combined function safely handles concurrent calls.

If multiple threads are popping entries from a full queue, then they just get serialized inside wait_and_pop, and

everything works fine. If the queue is empty, then each thread in turn will block waiting on the condition variable. When a new

entry is added to the queue, one of the threads will wake and take the value, whilst the others keep blocking. If more than one

thread wakes (e.g. with a spurious wake-up), or a new thread calls wait_and_pop concurrently, the while

loop ensures that only one thread will do the pop, and

the others will wait.

Update: As commenter David notes below, using multiple consumers does have one problem: if there are several

threads waiting when data is added, only one is woken. Though this is exactly what you want if only one item is pushed onto the

queue, if multiple items are pushed then it would be desirable if more than one thread could wake. There are two solutions to this:

use notify_all() instead of notify_one() when waking threads, or to call notify_one()

whenever any data is added to the queue, even if the queue is not currently empty. If all threads are notified then the extra

threads will see it as a spurious wake and resume waiting if there isn't enough data for them. If we notify with every

push() then only the right number of threads are woken. This is my preferred option: condition variable notify calls

are pretty light-weight when there are no threads waiting. The revised code looks like this:

template<typename Data>

class concurrent_queue

{

public:

void push(Data const& data)

{

boost::mutex::scoped_lock lock(the_mutex);

the_queue.push(data);

lock.unlock();

the_condition_variable.notify_one();

}

// rest as before

};

There is one benefit that the separate functions give over the combined one — the ability to check for an empty queue, and

do something else if the queue is empty. empty itself still works in the presence of multiple consumers, but the value

that it returns is transitory — there is no guarantee that it will still apply by the time a thread calls

wait_and_pop, whether it was true or false. For this reason it is worth adding an additional

function: try_pop, which returns true if there was a value to retrieve (in which case it retrieves it), or

false to indicate that the queue was empty.

template<typename Data>

class concurrent_queue

{

public:

bool try_pop(Data& popped_value)

{

boost::mutex::scoped_lock lock(the_mutex);

if(the_queue.empty())

{

return false;

}

popped_value=the_queue.front();

the_queue.pop();

return true;

}

// rest as before

};

By removing the separate front and pop functions, our simple naive implementation has now become a

usable multiple producer, multiple consumer concurrent queue.

The Final Code

Here is the final code for a simple thread-safe multiple producer, multiple consumer queue:

template<typename Data>

class concurrent_queue

{

private:

std::queue<Data> the_queue;

mutable boost::mutex the_mutex;

boost::condition_variable the_condition_variable;

public:

void push(Data const& data)

{

boost::mutex::scoped_lock lock(the_mutex);

the_queue.push(data);

lock.unlock();

the_condition_variable.notify_one();

}

bool empty() const

{

boost::mutex::scoped_lock lock(the_mutex);

return the_queue.empty();

}

bool try_pop(Data& popped_value)

{

boost::mutex::scoped_lock lock(the_mutex);

if(the_queue.empty())

{

return false;

}

popped_value=the_queue.front();

the_queue.pop();

return true;

}

void wait_and_pop(Data& popped_value)

{

boost::mutex::scoped_lock lock(the_mutex);

while(the_queue.empty())

{

the_condition_variable.wait(lock);

}

popped_value=the_queue.front();

the_queue.pop();

}

};

Posted by Anthony Williams

[/ threading /] permanent link

Tags: threading, thread safe, queue, condition variable

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

C++0x Draft and Concurrency Papers in the August 2008 Mailing

Thursday, 28 August 2008

Yesterday, the August 2008 C++ Standards Committee Mailing was published. This features a new Working Draft for C++0x, as well as quite a few other papers.

Thread-local storage

This draft incorporates N2659:

Thread-Local Storage, which was voted in at the June committee

meeting. This introduces a new keyword: thread_local

which can be used to indicate that each thread will have its own copy

of an object which would otherwise have static storage duration.

thread_local int global;

thread_local std::string constructors_allowed;

void foo()

{

struct my_class{};

static thread_local my_class block_scope_static;

}

As the example above shows, objects with constructors and

destructors can be declared thread_local. The constructor

is called (or other initialization done) before the first use of such

an object by a given thread. If the object is used on a given thread

then it is destroyed (and its destructor run) at thread exit. This is

a change from most common pre-C++0x implementations, which exclude

objects with constructors and destructors.

Additional concurrency papers

This mailing contains several papers related to concurrency and multithreading in C++0x. Some are just rationale or comments, whilst others are proposals which may well therefore be voted into the working draft at the September meeting. The papers are listed in numerical order.

- N2731: Proposed Text for Bidirectional Fences

- This is a revised version of N2633:

Improved support for bidirectional fences,

which incorporates naming changes requested by the committee at the

June meeting, along with some modifications to the memory model. In

particular, read-modify-write operations (such as

exchangeorfetch_add) that use thememory_order_relaxedordering can now feature as part of a release sequence, thus increasing the possibilities for usingmemory_order_relaxedoperations in lock-free code. Also, the definition of how fences that usememory_order_seq_cstinteract with othermemory_order_seq_cstoperations has been clarified. - N2744: Comments on Asynchronous Future Value Proposal

- This paper is a critique of N2671:

An Asynchronous Future Value: Proposed Wording. In short, the

suggestions are:

- that

shared_future<T>::get()should return by value rather than by const reference; - that

promiseobjects are copyable; - and that the

promisefunctions for setting the value and exception be overloaded with versions that return an error code rather than throwing an exception on failure.

- that

- N2745: Example POWER Implementation for C/C++ Memory Model

- This paper discusses how the C++0x memory model and atomic operations can be implemented on systems based on the POWER architecture. As a consequence, this also shows how the different memory orderings can affect the actual generated code for atomic operations.

- N2746: Rationale for the C++ working paper definition of "memory location"

- This paper is exactly what it says: a rationale for the definition

of "memory location". Basically, it discusses the reasons why every

object (even those of type

char) is a separate memory location, even though this therefore requires that memory be byte-addressable, and restricts optimizations on some architectures. - N2748: Strong Compare and Exchange

- In the current working paper, the atomic

compare_exchangefunctions are allowed to fail "spuriously" even when the value of the object was equal to the comparand. This allows efficient implementation on a wider variety of platforms than otherwise, but also requires almost all uses ofcompare_exchangeto be put in a loop. This paper proposes that instead we provide two variants:compare_exchange_weakandcompare_exchange_strong. The weak variant would be the same as the current version, whereas the strong variant would not be allowed to fail spuriously. On architectures which provide the strong variant by default (such as x86) this would remove the need for a loop in some cases.

Posted by Anthony Williams

[/ cplusplus /] permanent link

Tags: c++0x, concurrency

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

The Intel x86 Memory Ordering Guarantees and the C++ Memory Model

Tuesday, 26 August 2008

The July 2008 version of the Intel 64 and IA-32 Architecture documents includes the information from the memory ordering white paper I mentioned before. This makes it clear that on x86/x64 systems the preferred implementation of the C++0x atomic operations is as follows (which has been confirmed in discussions with Intel engineers):

| Memory Ordering | Store | Load |

|---|---|---|

| std::memory_order_relaxed | MOV [mem],reg | MOV reg,[mem] |

| std::memory_order_acquire | n/a | MOV reg,[mem] |

| std::memory_order_release | MOV [mem],reg | n/a |

| std::memory_order_seq_cst | XCHG [mem],reg | MOV reg,[mem] |

As you can see, plain MOV is enough for even

sequentially-consistent loads if a LOCKed instruction

such as XCHG is used for the sequentially-consistent

stores.

One thing to watch out for is the Non-Temporal SSE instructions

(MOVNTI, MOVNTQ, etc.), which by their

very nature (i.e. non-temporal) don't follow the normal

cache-coherency rules. Therefore non-temporal stores must be

followed by an SFENCE instruction in order for their

results to be seen by other processors in a timely fashion.

Additionally, if you're writing drivers which deal with memory pages marked WC (Write-Combining) then additional fence instructions will be required to ensure visibility between processors. However, if you're programming with WC pages then this shouldn't be a problem.

Posted by Anthony Williams

[/ threading /] permanent link

Tags: intel, x86, c++, threading, memory ordering, memory model

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

"Simpler Multithreading in C++0x" Article Online

Thursday, 21 August 2008

My latest article, Simpler Multithreading in C++0x is now available as part of DevX.com's Special Report on C++0x.

The article provides a whistle-stop tour of the new C++0x multithreading support.

Posted by Anthony Williams

[/ news /] permanent link

Tags: multithreading, C++0x

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

Boost 1.36.0 has been Released!

Tuesday, 19 August 2008

Verson 1.36.0 of the Boost libraries was released last week. Crucially, this contains the fix for the critical bug in the win32 implementation of condition variables found in the 1.35.0 release.

There are a few other changes to the Boost.Thread library: there are now functions for acquiring multiple locks without deadlock, for example.

There are of course new libraries to try, and other libraries have been updated too. See the full Release Notes for details, or just Download the release and give it a try.

Posted by Anthony Williams

[/ news /] permanent link

Tags: boost, C++

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

C++ Concurrency in Action Early Access Edition Available

Wednesday, 13 August 2008

As those of you who attended my talk on The Future of Concurrency in C++ at ACCU 2008 (or read the slides) will know, I'm writing a book on concurrency in C++: C++ Concurrency in Action: Practical Multithreading, due to be published next year.

Those nice folks over at Manning have made it available through their Early Access Program so you can start reading without having to wait for the book to be finished. By purchasing the Early Access Edition, you will get access to each chapter as it becomes available as well as your choice of a hard copy or Ebook when the book is finished. Plus, if you have any comments on the unfinished manuscript I may be able to take them into account as I revise each chapter. Currently, early drafts of chapters 1, 3, 4 and 5 are available.

I will be covering all aspects of multithreaded programming with the new C++0x standard, from the details of the new C++0x memory model and atomic operations to managing threads and designing parallel algorithms and thread-safe containers. The book will also feature a complete reference to the C++0x Standard Thread Library.

Posted by Anthony Williams

[/ news /] permanent link

Tags: C++, concurrency, multithreading

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

July 2008 C++ Standards Committee Mailing Published

Wednesday, 30 July 2008

The July 2008 mailing for the C++ Standards

Committee was published today. Primarily this is just an update on the "state of evolution" papers, and the issue

lists. However, there are a couple of new and revised papers. Amongst them is my revised paper on packaged_task: N2709: Packaging Tasks for Asynchronous Execution.

As I mentioned when the most recent C++0x draft

was published, futures are still under discussion,

and the LWG requested that packaged_task be moved to a separate paper, with a few minor changes. N2709 is this separate paper. Hopefully the LWG will

approve this paper at the September meeting of the C++ committee in San Francisco; if they don't, then packaged_task

will join the long list of proposals that have missed the boat for C++0x.

Posted by Anthony Williams

[/ cplusplus /] permanent link

Tags: C++0x, C++, standards

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

How Search Engines See Keywords

Friday, 25 July 2008

Jennifer Laycock's recent post on How Search Engines See Keywords over at Search Engine Guide really surprised me. It harks back to the 1990s, with talk of keyword density, and doesn't match my understanding of modern search engines at all. It especially surprised me given the author: I felt that Jennifer was pretty savvy about these things. Maybe I'm just missing something really crucial.

Anyway, my understanding is that the search engines index each and every word on your page, and store a count of each word and phrase. If you say "rubber balls" three times, it doesn't matter if you also say "red marbles" three times: the engines don't assign "keywords" to a page, they find pages that match what the user types. This is why if I include a random phrase on a web page exactly once, and then search for that phrase then my page will likely show up in the results (assuming my phrase was sufficiently uncommon), even though other phrases might appear more often on the same page.

Once the engine has found the pages that contain the phrase that users have searched for (whether in content, or in links to that page), the search engine then ranks those pages to decide what to show. The ranking will use things like the number of times the phrase appears on the page, whether it appears in the title, in headings, links, <strong> tags or just in plain text, how many other pages link to that page with that phrase, and all the usual stuff.

Here, let's put it to the test. At the time of writing, a search on Google for "wibble flibble splodge bucket" with quotes returns no results, and a search without quotes returns just three entries. Given Google's crawl rate for my website, I expect this blog entry will turn up in the search results for that phrase within a few days, even though it only appears the once and other phrases such as "search engines" appear far more often. Of course, I may be wrong, but only time will tell.

Posted by Anthony Williams

[/ webdesign /] permanent link

Tags: seo, search engines, keywords

Stumble It! ![]() | Submit to Reddit

| Submit to Reddit ![]() | Submit to DZone

| Submit to DZone ![]()

If you liked this post, why not subscribe to the RSS feed ![]() or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

or Follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

Review of the Windows Installer XML (WiX) Toolset

Tuesday, 15 July 2008

A couple of weeks ago, one of the products I maintain for a client needed a new installer. This application is the server part of a client-server suite, and runs as a Service on Microsoft Windows. The old installer was built using the simple installer-builder that is part of Microsoft Visual Studio, and could not easily be extended to handle the desired functionality. Enter the WiX toolset.

As its name suggests, the Windows Installer XML Toolset is a set of tools for building Windows Installer (MSI) packages, using XML to describe the installer. At first glance, this doesn't look much easier than populating the MSI tables directly using Microsoft's Orca tool, but it's actually really straightforward, especially with the help of the WiX tutorial.

It's all about the <XML>

The perceived complexity comes from several factors. First up,

every individual file, directory and registry entry that is to be

installed must be specified as a <Component> and

given a GUID which can be used to uniquely identify that item. The

details of what to install where, combined with the inherent verbosity

of XML makes the installer build file quite large. Thankfully, being

XML, whitespace such as indenting and blank lines can be used to

separate things into logical groups, and XML comments can be used if

necessary. The installer GUI is also built using XML, which can make

things very complicated. Thankfully WiX comes with a set of

pre-designed GUIs which can be referenced from your installer build

file — you can even provide your own images in order to brand

the installer with your company logo, for example.

Once you've got over the initial shock of the XML syntax, the

toolkit is actually really easy to use. The file and directory

structure to be used by the installed application is described using

nested XML elements to mimic the directory structure, with special

predefined directory identifiers for things like the Windows

directory, the Program Files directory or the user's My Documents

folder. You can also use nested <Feature> tags to

create a tree of features which the user can choose to install (or

not) if they opt for a custom install. Each "feature" is a group of

one or more components which are identified with nested tags in the

feature tags.

Custom Actions

What if you're not just installing a simple desktop application?

Windows Installer provides support for custom actions which can be run

as part of the installation, and WiX allows you to specify

these. The old installer for the server application used custom

actions to install the service, but these weren't actually necessary