Blog Archive for / 2015 / 07 /

10th Anniversary Sale - last few days

Wednesday, 29 July 2015

The last few days of our 10th Anniversary Sale are upon us. Just::Thread and Just::Thread Pro are available for 50% off the normal price until 31st July 2015.

Just::Thread is our implementation of the C++11 and C++14 thread libraries, for Windows, Linux and MacOSX. It also includes some of the extensions from the upcoming C++ Concurrency TS, with more to come shortly.

Just::Thread Pro is our add-on library which provides an Actor framework for easier concurrency, along with concurrent data structures: a thread-safe queue, a concurrent hash map, and a wrapper for ensuring synchronized access to single objects.

All licences include a free upgrade to point releases, so if you purchase now you'll get a free upgrade to all 2.x releases.

Coming soon in v2.2

The V2.2 release of Just::Thread will be out soon. This will include the new facilities from the Concurrency TS:

Some features from the Concurrency TS are already in V2.1:

All customers with V2.x licenses, including those purchased during the sale, will get a free upgrade to V2.2 when it is released.

Posted by Anthony Williams

[/ news /] permanent link

Tags: sale


If you liked this post, why not subscribe to the RSS feed or follow me on Twitter? You can also subscribe to this blog by email using the form on the left.


std::shared_ptr's secret constructor

Friday, 24 July 2015

std::shared_ptr has a secret: a constructor that most users don't even know exists, but which is surprisingly useful. It was added during the lead-up to the C++11 standard, so it wasn't in the TR1 version of shared_ptr, but it has shipped with gcc since at least gcc 4.3, with Visual Studio since Visual Studio 2010, and it's been in Boost since at least v1.35.0.

This constructor doesn't appear in most tutorials on std::shared_ptr. Nicolai Josuttis devotes half a page to this constructor in the second edition of The C++ Standard Library, but Scott Meyers doesn't even mention it in his item on std::shared_ptr in Effective Modern C++.

So: what is this constructor? It's the aliasing constructor.

Aliasing shared_ptrs

What does this secret constructor do for us? It allows us to construct a new shared_ptr instance that shares ownership with another shared_ptr, but which has a different pointer value. I'm not talking about a pointer value that's just been cast from one type to another; I'm talking about a completely different value. You can have a shared_ptr<std::string> and a shared_ptr<double> share ownership, for example.

Of course, only one pointer is ever owned by a given set of shared_ptr objects, and only that pointer will be deleted when the objects are destroyed. Just because you can create a new shared_ptr that holds a different value doesn't mean the second pointer will magically be freed as well. Only the original pointer value used to create the first shared_ptr will be deleted.

If your new pointer values don't get freed, what use is this constructor? It allows you to pass out shared_ptr objects that refer to subobjects and keep the parent alive.
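To make the ownership rules concrete, here is a small sketch using only the standard library. The function name aliasing_demo, the counting deleter and global_value are illustrative inventions: a shared_ptr<double> is made to share ownership with a shared_ptr<std::string>, and the deleter count shows that only the string is ever deleted.

```cpp
#include <cassert>
#include <memory>
#include <string>

// A double with static storage duration: it outlives every shared_ptr below,
// which is all the aliasing constructor requires of the stored pointer.
static double global_value = 3.5;

// Hypothetical demo: a shared_ptr<double> sharing ownership with a
// shared_ptr<std::string>. 'deletions' counts how often the string is deleted.
void aliasing_demo(int& deletions) {
    std::shared_ptr<double> alias;
    {
        std::shared_ptr<std::string> owner(
            new std::string("owned"),
            [&deletions](std::string* p) { ++deletions; delete p; });

        // Aliasing constructor: shares owner's control block, but stores &global_value.
        alias = std::shared_ptr<double>(owner, &global_value);
        assert(owner.use_count() == 2);
        assert(alias.get() == &global_value);
    } // owner is destroyed here; alias alone keeps the string alive

    assert(deletions == 0);
    assert(alias.use_count() == 1);
    assert(*alias == 3.5);

    // Dropping the last owner runs the deleter on the string, never on &global_value.
    alias.reset();
    assert(deletions == 1);
}
```

The double here has static storage duration, so it trivially outlives the group; in real code the aliased pointer would usually point into the owned object itself.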

Sharing subobjects

Suppose you have a class X with a member that is an instance of some class Y:

struct X{
    Y y;
};

Now, suppose you have a dynamically allocated instance of X that you're managing with a shared_ptr<X>, and you want to pass the Y member to a library that takes a shared_ptr<Y>. You could construct a shared_ptr<Y> that refers to the member, with a do-nothing deleter, so the library doesn't actually try and delete the Y object, but what if the library keeps hold of the shared_ptr<Y> and our original shared_ptr<X> goes out of scope?

struct do_nothing_deleter{
    template<typename T> void operator()(T*){}
};

void store_for_later(std::shared_ptr<Y>);

void foo(){
    std::shared_ptr<X> px(std::make_shared<X>());
    std::shared_ptr<Y> py(&px->y,do_nothing_deleter());
    store_for_later(py);
} // our X object is destroyed

Our stored shared_ptr<Y> now points midway through a destroyed object, which is rather undesirable. This is where the aliasing constructor comes in: rather than fiddling with deleters, we just say that our shared_ptr<Y> shares ownership with our shared_ptr<X>. Now our shared_ptr<Y> keeps our X object alive, so the pointer it holds is still valid.

void bar(){
    std::shared_ptr<X> px(std::make_shared<X>());
    std::shared_ptr<Y> py(px,&px->y);
    store_for_later(py);
} // our X object is kept alive

The pointer doesn't have to be directly related at all: the only requirement is that the object it points to lives at least as long as the shared_ptr objects that reference it. If we had a new class X2 that held a dynamically allocated Y object, we could still use the aliasing constructor to get a shared_ptr<Y> that referred to our dynamically-allocated Y object.

struct X2{
    std::unique_ptr<Y> y;
    X2():y(new Y){}
};

void baz(){
    std::shared_ptr<X2> px(std::make_shared<X2>());
    std::shared_ptr<Y> py(px,px->y.get());
    store_for_later(py);
} // our X2 object is kept alive

This could be used for classes that use the pimpl idiom, or trees where you want to be able to pass round pointers to the child nodes, but keep the whole tree alive. Or, you could use it to keep a shared library alive as long as a pointer to a variable stored in that library was being used. If our class X loads the shared library in its constructor and unloads it in the destructor, then we can pass round shared_ptr<Y> objects that share ownership with our shared_ptr<X> object to keep the shared library from being unloaded until all the shared_ptr<Y> objects have been destroyed or reset.
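For the tree case, a minimal sketch might look like the following. Node and share_child are hypothetical names made up for this example: each node owns its children through std::unique_ptr, and share_child hands out a shared_ptr<Node> for a child that shares ownership with the root.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical tree type: the root owns every descendant through unique_ptr.
struct Node {
    int value;
    std::vector<std::unique_ptr<Node>> children;
    explicit Node(int v) : value(v) {}
};

// Hypothetical helper: returns a shared_ptr to one child node that shares
// ownership with the root, so the whole tree stays alive while it is held.
std::shared_ptr<Node> share_child(std::shared_ptr<Node> const& root, std::size_t index) {
    return std::shared_ptr<Node>(root, root->children.at(index).get());
}
```

A std::weak_ptr to the root is a convenient way to check that the tree really does stay alive until the last child pointer is released.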

The details

The constructor signature looks like this:

template<typename Other>
shared_ptr(shared_ptr<Other> const& other,T* p);

As ever, if you're constructing a shared_ptr<T> then the pointer p must be convertible to a T*, but there's no restriction on the type of Other at all. The newly constructed object shares ownership with other, so other.use_count() is increased by 1, and the value returned by get() on the new object is p.

There's a slight nuance here: if other is an empty shared_ptr, such as a default-constructed shared_ptr, then the new shared_ptr is also empty, and has a use_count() of 0, but it has a non-NULL value if p was not NULL.

int i;
std::shared_ptr<int> sp(std::shared_ptr<X>(),&i);
assert(sp.use_count()==0);
assert(sp.get()==&i);

Whether this odd effect has any use is open to debate.

Final Thoughts

This little-known constructor is potentially very useful for passing around shared_ptr objects that reference parts of a non-trivial data structure and keep the whole data structure alive. Not everyone will need it, but for those who do, it will avoid a lot of headaches.

Posted by Anthony Williams

[/ cplusplus /] permanent link

Tags: shared_ptr, cplusplus


All the world's a stage... C++ Actors from Just::Thread Pro

Wednesday, 15 July 2015

Handling shared mutable state is probably the single hardest part of writing multithreaded code. There are lots of ways to address this problem; one of the common ones is the actors metaphor. Going back to Hoare's Communicating Sequential Processes, the idea is simple - you build your program out of a set of actors that send each other messages. Each actor runs normal sequential code, occasionally pausing to receive incoming messages from other actors. This means that you can analyse the behaviour of each actor independently; you only need to consider which messages might be received at each receive point. You could treat each actor as a state machine, with the messages triggering state transitions.
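The core of the idea can be sketched with nothing more than the standard library. This is not how Just::Thread Pro is implemented; mailbox and collect_until_stop are hypothetical names for illustration. The mailbox holds the only state shared between threads, and the "actor" is ordinary sequential code that pauses at receive() until a message arrives.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <mutex>
#include <string>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical minimal mailbox: the only state shared between threads.
template<typename Message>
class mailbox {
public:
    // Any thread may post a message; it is appended to the queue.
    void send(Message m) {
        {
            std::lock_guard<std::mutex> lk(mutex_);
            queue_.push_back(std::move(m));
        }
        cond_.notify_one();
    }

    // Called by the owning actor: blocks until a message is available.
    Message receive() {
        std::unique_lock<std::mutex> lk(mutex_);
        cond_.wait(lk, [this] { return !queue_.empty(); });
        Message m = std::move(queue_.front());
        queue_.pop_front();
        return m;
    }

private:
    std::mutex mutex_;
    std::condition_variable cond_;
    std::deque<Message> queue_;
};

// Hypothetical actor body: plain sequential code that pauses at receive().
// It records every message until it sees "stop", then returns them.
std::vector<std::string> collect_until_stop(mailbox<std::string>& inbox) {
    std::vector<std::string> seen;
    for (;;) {
        std::string msg = inbox.receive();
        if (msg == "stop") return seen;
        seen.push_back(msg);
    }
}
```

Run collect_until_stop on its own std::thread and post messages from main(); the two threads never touch each other's data except through the mailbox.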

This is how Erlang processes work: each process is an actor, which runs independently from the other processes, except that they can send messages to each other. Just::Thread Pro: Actors Edition adds library facilities to C++ to support this. In the rest of this article I will describe how to write programs that take advantage of it. Though the details will differ, the approach can be used with other libraries that provide similar facilities, or with the actor support in other languages.

Simple Actors

Actors are embodied in the jss::actor class. You pass in a function or other callable object (such as a lambda function) to the constructor, and this function is then run on a background thread. This is exactly the same as for std::thread, except that the destructor waits for the actor thread to finish, rather than calling std::terminate.
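If you only want the waiting destructor on its own, a hypothetical joining_thread wrapper around std::thread gives a rough approximation. It illustrates the behaviour described above and is not the jss::actor implementation:

```cpp
#include <cassert>
#include <thread>
#include <utility>

// Hypothetical RAII wrapper: runs a callable on a new thread and, unlike a
// joinable std::thread, waits for it in the destructor instead of terminating.
class joining_thread {
public:
    template<typename F, typename... Args>
    explicit joining_thread(F&& f, Args&&... args)
        : t_(std::forward<F>(f), std::forward<Args>(args)...) {}

    joining_thread(joining_thread const&) = delete;
    joining_thread& operator=(joining_thread const&) = delete;

    ~joining_thread() {
        if (t_.joinable()) t_.join(); // block until the background function returns
    }

private:
    std::thread t_;
};
```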

void simple_function(){
    std::cout<<"simple actor\n";
}

int main(){
    jss::actor actor1(simple_function);
    jss::actor actor2([]{
        std::cout<<"lambda actor\n";
    });
}

The waiting destructor is nice, but it's really a side issue: the main benefit of actors is the ability to communicate using messages rather than shared state.

Sending and receiving messages

To send a message to an actor you just call the send() member function on the actor object, passing in whatever message you wish to send. send() is a function template, so you can send any type of message: there are no special requirements on the message type, just that it is a MoveConstructible type. You can also use the stream-insertion operator to send a message, which allows easy chaining, e.g.

actor1.send(42);
actor2.send(MyMessage("some data"));
actor2<<Message1()<<Message2();

Sending a message to an actor just adds it to the actor's message queue. If the actor never checks the message queue then the message does nothing. To check the message queue, the actor function needs to call the receive() static member function of jss::actor. This is a static member function so that it always has access to the running actor, anywhere in the code. If it were a non-static member function then you would need to ensure that the appropriate object was passed around, which would complicate interfaces and open up the possibility of the wrong object being passed around, as well as lifetime management issues.

The call to jss::actor::receive() will then block the actor's thread until a message that it can handle has been received. By default, the only message type that can be handled is jss::stop_actor. If a message of this type is sent to an actor then the receive() function will throw a jss::stop_actor exception. Uncaught, this exception will stop the actor running. In the following example, the only output will be "Actor running", since the actor will block at the receive() call until the stop message is sent, and when the message arrives, receive() will throw.

void stoppable_actor(){
    std::cout<<"Actor running"<<std::endl;
    jss::actor::receive();
    std::cout<<"This line is never run"<<std::endl;
}

int main(){
    jss::actor a1(stoppable_actor);
    std::this_thread::sleep_for(std::chrono::seconds(1));
    a1.send(jss::stop_actor());
}

Sending a "stop" message is common enough that there's a special member function for that too: stop(). "a1.stop()" is thus equivalent to "a1.send(jss::stop_actor())".

Handling a message of another type requires that you tell the receive() call what types of message you can handle, which is done by chaining one or more calls to the match() function template. You must specify the type of the message to handle, and then provide a function to call if the message is received. Any messages other than jss::stop_actor not specified in a match() call will be removed from the queue, but otherwise ignored. In the following example, only messages of type "int" and "std::string" are accepted; the output is thus:

Waiting
42
Waiting
Hello
Waiting
Done

Here's the code:

void simple_receiver(){
    while(true){
        std::cout<<"Waiting"<<std::endl;
        jss::actor::receive()
            .match<int>([](int i){std::cout<<i<<std::endl;})
            .match<std::string>([](std::string const&s){std::cout<<s<<std::endl;});
    }
}

int main(){
    {
        jss::actor a(simple_receiver);
        a.send(true);
        a.send(42);
        a.send(std::string("Hello"));
        a.send(3.141);
        a.send(jss::stop_actor());
    } // wait for actor to finish
    std::cout<<"Done"<<std::endl;
}

It is important to note that the receive() call will block until it receives one of the messages you have told it to handle, or a jss::stop_actor message, and unexpected messages will be removed from the queue and discarded. This means the actors don't accumulate a backlog of messages they haven't yet said they can handle, and you don't have to worry about out-of-order messages messing up a receive() call.
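The discard-unmatched rule can be illustrated with a small, single-threaded sketch. typed_queue and wrapped_message are hypothetical, and the sketch matches only one type per call, unlike the chained match() calls above; it uses a virtual base class and dynamic_cast for type erasure, and returns false rather than blocking when the queue runs dry.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <memory>
#include <string>
#include <utility>

// Hypothetical type-erased message wrapper.
struct message_base { virtual ~message_base() {} };

template<typename T>
struct wrapped_message : message_base {
    explicit wrapped_message(T v) : value(std::move(v)) {}
    T value;
};

// Hypothetical single-threaded queue (no locking, no blocking).
class typed_queue {
public:
    template<typename T>
    void send(T v) {
        queue_.push_back(std::unique_ptr<message_base>(new wrapped_message<T>(std::move(v))));
    }

    // Pops messages until one of type T is found; anything else is discarded.
    // Returns false if the queue runs dry first (a real actor would block).
    template<typename T>
    bool receive(T& out) {
        while (!queue_.empty()) {
            std::unique_ptr<message_base> m = std::move(queue_.front());
            queue_.pop_front();
            if (auto* w = dynamic_cast<wrapped_message<T>*>(m.get())) {
                out = std::move(w->value);
                return true;
            }
        }
        return false;
    }

    std::size_t size() const { return queue_.size(); }

private:
    std::deque<std::unique_ptr<message_base>> queue_;
};
```

Sending the same sequence as the example above (a bool, an int, a double and a string) and asking for an int then a string drops the bool and the double along the way.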

These simple examples have just had main() sending messages to the actors. For a true actor-based system we need them to be able to send messages to each other, and reply to messages. Let's take a look at how we can do that.

Referencing one actor from another

Suppose we want to write a simple time service actor, that sends the current time back to any other actor that asks it for the time. At first thought it looks rather simple: write a simple loop that handles a "time request" message, gets the time, and sends a response. It won't be that much different from our simple_receiver() function above:

struct time_request{};

void time_server(){
    while(true){
        jss::actor::receive()
            .match<time_request>([](time_request r){
                auto now=std::chrono::system_clock::now();
                ????.send(now);
            });
    }
}

The problem is, we don't know which actor to send the response to: the whole point of this time server is that it will respond to a message from any other actor. The solution is to pass the sender as part of the message. We could just pass a pointer or reference to the jss::actor instance, but that requires that the actor knows the location of its own controlling object, which makes it more complicated. None of the examples we've had so far could know that, since the controlling object is a local variable declared in a separate function. What is needed instead is a simple means of identifying an actor, which the actor code can query: an actor reference. The type of an actor reference is jss::actor_ref, which is implicitly constructible from a jss::actor. An actor can also obtain a reference to itself by calling jss::actor::self(). jss::actor_ref has a send() member function and stream insertion operator for sending messages, just like jss::actor. So, we can put the sender of our time_request message in the message itself as a jss::actor_ref data member, and use that when sending the response.
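The underlying trick, carrying the reply address inside the request, does not depend on the actor library. As a standard-library-only sketch of that idea (a swapped-in technique, not jss::actor_ref), a one-shot std::promise can play the role of the sender field; clock_request and handle_clock_request are hypothetical names:

```cpp
#include <cassert>
#include <chrono>
#include <future>
#include <thread>
#include <utility>

// Hypothetical request type: the reply channel travels inside the message,
// much as the sender travels inside time_request in the text.
struct clock_request {
    std::promise<std::chrono::system_clock::time_point> reply_to;
};

// Server-side handler: answers through whatever channel the request carried,
// without needing to know who sent it.
void handle_clock_request(clock_request r) {
    r.reply_to.set_value(std::chrono::system_clock::now());
}
```

Unlike an actor reference, a promise can only be used to reply once, which suits a single request/response exchange but not an ongoing conversation.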

struct time_request{
    jss::actor_ref sender;
};

void time_server(){
    while(true){
        jss::actor::receive()
            .match<time_request>([](time_request r){
                auto now=std::chrono::system_clock::now();
                r.sender<<now;
            });
    }
}

void query(jss::actor_ref server){
    server<<time_request{jss::actor::self()};
    jss::actor::receive()
        .match<std::chrono::system_clock::time_point>(
            [](std::chrono::system_clock::time_point){
                std::cout<<"time received"<<std::endl;
            });
}

Dangling references

If you use jss::actor_ref then you have to be prepared for the case that the referenced actor might have stopped executing by the time you send the message. In this case, any attempts to send a message through the jss::actor_ref instance will throw an exception of type [jss::no_actor](http://www.stdthread.co.uk/prodoc/headers/actor/no_actor.html). To be robust, our time server really ought to handle that too: if an unhandled exception of any type other than jss::stop_actor escapes the actor function then the library will call std::terminate. We should therefore wrap the attempt to send the message in a try-catch block.

void time_server(){
    while(true){
        jss::actor::receive()
            .match<time_request>([](time_request r){
                auto now=std::chrono::system_clock::now();
                try{
                    r.sender<<now;
                } catch(jss::no_actor&){}
            });
    }
}

We can now set up a pair of actors that play ping-pong:

struct pingpong{
    jss::actor_ref sender;
};

void pingpong_player(std::string message){
    while(true){
        try{
            jss::actor::receive()
                .match<pingpong>([&](pingpong msg){
                    std::cout<<message<<std::endl;
                    std::this_thread::sleep_for(std::chrono::milliseconds(50));
                    msg.sender<<pingpong{jss::actor::self()};
                });
        }
        catch(jss::no_actor&){
            std::cout<<"Partner quit"<<std::endl;
            break;
        }
    }
}

int main(){
    jss::actor ping(pingpong_player,"ping");
    jss::actor pong(pingpong_player,"pong");
    ping<<pingpong{pong};
    std::this_thread::sleep_for(std::chrono::seconds(1));
    ping.stop();
    pong.stop();
}

This will give output along the lines of the following:

ping
pong
ping
pong
ping
pong
ping
pong
ping
pong
ping
pong
ping
pong
ping
pong
ping
pong
ping
pong
Partner quit

The sleep in the player's message handler is to slow everything down: if you take it out then messages will go back and forth as fast as the system can handle, and you'll get thousands of lines of output. However, even at full speed the pings and pongs will be interleaved, because sending a message synchronizes with the receive() call that receives it.
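The "Partner quit" behaviour comes from jss::actor_ref being a non-owning reference. A rough standard-library analogue uses std::weak_ptr: the reference does not keep its target alive, so a send after the target has gone fails with an exception. inbox, inbox_ref and no_actor_error are hypothetical stand-ins, and the sketch is single-threaded with no locking:

```cpp
#include <cassert>
#include <deque>
#include <memory>
#include <stdexcept>
#include <string>
#include <utility>

// Hypothetical stand-in for the role jss::no_actor plays in the text.
struct no_actor_error : std::runtime_error {
    no_actor_error() : std::runtime_error("target has finished") {}
};

// Hypothetical actor state: just a queue of pending messages.
struct inbox {
    std::deque<std::string> messages;
};

// Non-owning reference to an inbox: it never keeps the target alive,
// so sending can fail once the target has been destroyed.
class inbox_ref {
public:
    explicit inbox_ref(std::shared_ptr<inbox> const& target) : target_(target) {}

    void send(std::string msg) const {
        std::shared_ptr<inbox> t = target_.lock(); // empty if the target is gone
        if (!t) throw no_actor_error();
        t->messages.push_back(std::move(msg));
    }

private:
    std::weak_ptr<inbox> target_;
};
```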

That's essentially all there is to it — the rest is just application design. As an example of how it can all be put together, let's look at an implementation of the classic sleeping barber problem.

The Lazy Barber

For those that haven't met it before, the problem goes like this: Mr Todd runs a barber shop, but he's very lazy. If there are no customers in the shop then he likes to go to sleep. When a customer comes in they have to wake him up if he's asleep, take a seat if there is one, or come back later if there are no free seats. When Mr Todd has cut someone's hair, he must move on to the next customer if there is one, otherwise he can go back to sleep.

The barber actor

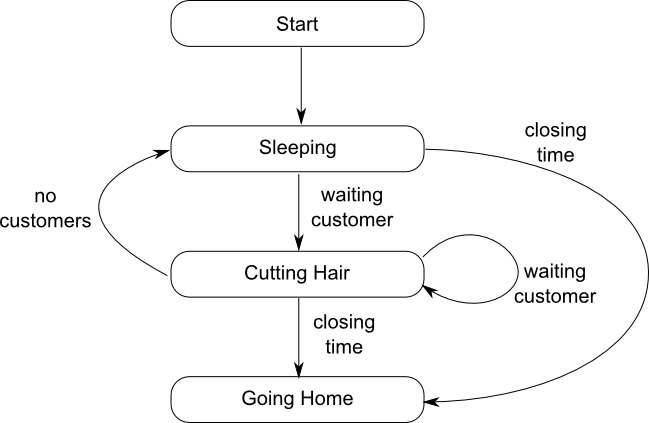

Let's start with the barber. He sleeps in his chair until a customer comes in, then wakes up and cuts the customer's hair. When he's done, if there is a waiting customer he cuts that customer's hair. If there are no customers, he goes back to sleep, and finally at closing time he goes home. This is shown as a state machine in figure 1.

This translates into code as shown in listing 1. The wait loops for "sleeping" and "cutting hair" have been combined, since almost the same set of messages is being handled in each case: the only difference is that the "cutting hair" state also has the option of "no customers", which cannot be received in the "sleeping" state, and would be a no-op if it was. This allows the action associated with the "cutting hair" state to be entirely handled in the lambda associated with the customer_waiting message; splitting the wait loops would require that the code was extracted out to a separate function, which would make it harder to keep count of the haircuts. Of course, if you don't have a compiler with lambda support then you'll need to do that anyway. The logger is a global actor that receives std::strings as messages and writes them to std::cout. This avoids any synchronization issues with multiple threads trying to write out at once, but it does mean that you have to pre-format the strings, such as when logging the number of haircuts done in the day. The code for this is shown in listing 2.

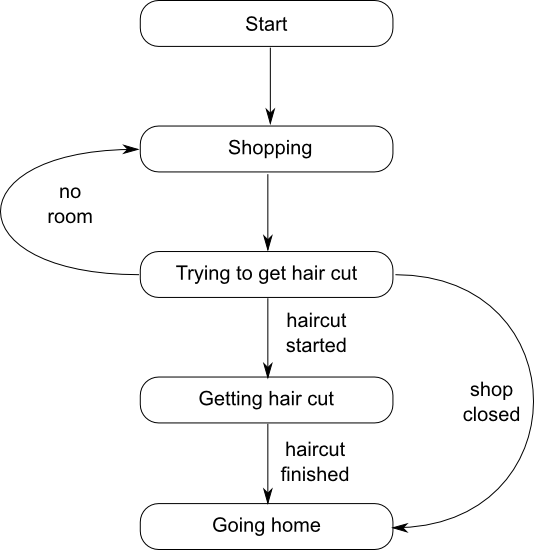

The customer actor

Let's look at things from the other side: the customer. The customer goes to town, and does some shopping. Each customer periodically goes into the barber shop to try and get a hair cut. If they manage, or the shop is closed, then they go home, otherwise they do some more shopping and go back to the barber shop later. This is shown in the state machine in figure 2.

This translates into the code in listing 3. Note that the customer interacts with a "shop" actor that I haven't mentioned yet. It is often convenient to have an actor that represents shared state, since this allows access to the shared state from other actors to be serialized without needing an explicit mutex. In this case, the shop holds the number of waiting customers, which must be shared with any customers that come in, so they know whether there is a free chair or not. Rather than have the barber deal with messages from new customers while he is cutting hair, the shop acts as an intermediary. The customer also has to handle the case that the shop has already closed, so the shop reference might refer to an actor that has finished executing, and thus get a jss::no_actor exception when trying to send messages.

The message handlers for the shop are short, and just send out further messages to the barber or the customer, which is ideal for a simple state manager: you don't want other actors waiting to perform simple state checks because the state manager is performing a lengthy operation, which is why we separated the shop from the barber. The shop has two states: open, where new customers are accepted provided there is a free chair, and closing, where new customers are turned away and the shop is just waiting for the last customer to leave. If a customer comes in and there is a free chair, then a message is sent to the barber that there is a customer waiting; if there is no space, then a message is sent back to the customer to say so. When it's closing time we switch to the "closing" state: in the code we exit the first while loop and enter the second. This is all shown in listing 4.

The messages are shown in listing 5, and the main() function that drives it all is in listing 6.

Exit stage left

There are of course other ways of writing code to deal with any particular scenario, even if you stick to using actors. This article has shown some of the issues that you need to think about when using an actor-based approach, as well as demonstrating how it all fits together with the Just::Thread Pro actors library. Though the details will be different, the larger issues will be common to any implementation of the actor model.

Get the code

If you want to download the code for a better look, or to try it out, you can download it here.

Get your copy of Just::Thread Pro

If you like the idea of working with actors in your code, now is the ideal time to get Just::Thread Pro. Get your copy now.

This blog post is based on an article that was printed in the July 2013 issue of CVu, the Journal of the ACCU.

Posted by Anthony Williams

[/ threading /] permanent link

Tags: cplusplus, actors, concurrency, multithreading


New Concurrency Features in C++14

Wednesday, 08 July 2015

It might have been out for 7 months already, but the C++14 standard is still pretty fresh. The changes include a couple of enhancements to the thread library, so I thought it was about time I wrote about them here.

Shared locking

The biggest of the changes is the addition of std::shared_timed_mutex. This is a multiple-reader, single-writer mutex. This means that in addition to the single-ownership mode supported by the other standard mutexes, you can also lock it in shared ownership mode, in which case multiple threads may hold a shared ownership lock at the same time.

This is commonly used for data structures that are read frequently but modified only rarely. When the data structure is stable, all threads that want to read the data structure are free to do so concurrently, but when the data structure is being modified then only the thread doing the modification is permitted to access the data structure.
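The difference between the two ownership modes can be checked directly. The following sketch assumes a C++14 compiler and uses the standard RAII lock types with std::shared_timed_mutex; probe_while_reading and probe_result are hypothetical names. While one shared lock is held, a second thread attempts another shared lock, and a third thread makes a single non-blocking attempt at an exclusive lock:

```cpp
#include <cassert>
#include <chrono>
#include <mutex>
#include <shared_mutex>
#include <thread>

struct probe_result {
    bool second_reader; // could another thread take shared ownership?
    bool writer;        // could another thread take exclusive ownership?
};

// While this thread holds a shared lock, probe from other threads whether a
// second shared lock and an exclusive lock can be acquired.
probe_result probe_while_reading() {
    std::shared_timed_mutex m;
    std::shared_lock<std::shared_timed_mutex> first_reader(m);
    probe_result r{false, false};

    std::thread reader([&] {
        // Timed attempt: shared ownership should be granted alongside first_reader.
        std::shared_lock<std::shared_timed_mutex> lk(m, std::chrono::milliseconds(500));
        r.second_reader = lk.owns_lock();
    });
    reader.join();

    std::thread writer([&] {
        // try_to_lock: one non-blocking attempt at exclusive ownership,
        // which cannot succeed while any shared lock is held.
        std::unique_lock<std::shared_timed_mutex> lk(m, std::try_to_lock);
        r.writer = lk.owns_lock();
    });
    writer.join();
    return r;
}
```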

The timed part of the name is analogous to std::timed_mutex and std::recursive_timed_mutex: timeouts can be specified for any attempt to acquire a lock, whether a shared-ownership lock or a single-ownership lock. There is a proposal to add a plain std::shared_mutex to the next standard, since this can have lower overhead on some platforms.

To manage the new shared ownership mode there is a new set of member functions: lock_shared(), try_lock_shared(), unlock_shared(), try_lock_shared_for() and try_lock_shared_until(). Obtaining and releasing the single-ownership lock is performed with the same set of operations as for std::timed_mutex.

However, it is generally considered bad practice to use these functions directly in C++, since that leaves the possibility of dangling locks if a code path doesn't release the lock correctly. It is better to use std::lock_guard and std::unique_lock to perform lock management in the single-ownership case, and the shared-ownership case is no different: the C++14 standard also provides a new std::shared_lock class template for managing shared ownership locks. It works just the same as std::unique_lock; for common use cases it acquires the lock in the constructor, and releases it in the destructor, but there are member functions to allow alternative access patterns.

Typical uses will thus look like the following:

std::shared_timed_mutex m;
my_data_structure data;

void reader(){
    std::shared_lock<std::shared_timed_mutex> lk(m);
    do_something_with(data);
}

void writer(){
    std::lock_guard<std::shared_timed_mutex> lk(m);
    update(data);
}

Performance warning

The implementation of a mutex that supports shared ownership is inherently more complex than a mutex that only supports exclusive ownership, and all the shared-ownership locks still need to modify the mutex internals. This means that the mutex itself can become a point of contention, and sap performance. Whether using a std::shared_timed_mutex instead of a std::mutex provides better or worse performance overall is therefore strongly dependent on the work load and access patterns.

As ever, I therefore strongly recommend profiling your application with std::mutex and std::shared_timed_mutex in order to ascertain which performs best for your use case.
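A starting point for that comparison might be a small timing harness like the following. time_reads is a hypothetical helper, not part of any library, and a micro-benchmark of bare lock and unlock calls will rarely reflect your real access patterns, so treat its numbers with suspicion:

```cpp
#include <cassert>
#include <chrono>
#include <mutex>
#include <shared_mutex>
#include <thread>
#include <vector>

// Hypothetical micro-benchmark: 'threads' readers each take 'iterations'
// locks of type Lock on a Mutex and read a shared value. Returns wall time.
template<typename Mutex, typename Lock>
std::chrono::nanoseconds time_reads(int threads, int iterations) {
    Mutex m;
    long shared_value = 1;
    std::vector<std::thread> pool;
    auto start = std::chrono::steady_clock::now();
    for (int t = 0; t < threads; ++t) {
        pool.emplace_back([&] {
            long sum = 0;
            for (int i = 0; i < iterations; ++i) {
                Lock lk(m);          // exclusive or shared, depending on Lock
                sum += shared_value; // read-only access under the lock
            }
            (void)sum;
        });
    }
    for (auto& th : pool) th.join();
    return std::chrono::duration_cast<std::chrono::nanoseconds>(
        std::chrono::steady_clock::now() - start);
}
```

For example, compare time_reads<std::mutex, std::lock_guard<std::mutex>>(4, 100000) with time_reads<std::shared_timed_mutex, std::shared_lock<std::shared_timed_mutex>>(4, 100000) on the target machine.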

std::chrono enhancements

The other concurrency enhancements in the C++14 standard are all in the <chrono> header. Though this isn't strictly about concurrency, it is used for all the timing-related functions in the concurrency library, and is therefore important for any threaded code that has timeouts.

constexpr all round

The first change is that the library has been given a hefty dose of constexpr goodness. Instances of std::chrono::duration and std::chrono::time_point now have constexpr constructors and simple arithmetic operations are also constexpr. This means you can now create durations and time points which are compile-time constants. It also means they are literal types, which is important for the other enhancement to <chrono>: user-defined literals for durations.
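A few static_assert checks make the effect visible: all of the following are evaluated at compile time. This is a sketch assuming a C++14 compiler; the variable names are invented for illustration.

```cpp
#include <cassert>
#include <chrono>

// Durations: constructors and non-member arithmetic/comparison are constexpr.
constexpr std::chrono::seconds half_minute(30);
constexpr std::chrono::seconds one_minute = half_minute + half_minute;
static_assert(one_minute.count() == 60, "computed at compile time");
static_assert(one_minute == std::chrono::minutes(1), "comparison across units");

// C++14: time_point construction and time_since_epoch() are constexpr too.
constexpr std::chrono::time_point<std::chrono::system_clock, std::chrono::seconds>
    epoch_plus_minute(one_minute);
static_assert(epoch_plus_minute.time_since_epoch() == one_minute, "constexpr time_point");

// A hypothetical compile-time default timeout, usable wherever a constant is needed.
constexpr std::chrono::milliseconds default_timeout(250);
```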

User-defined literals

C++11 introduced the idea of user-defined literals, so you could provide a suffix to numeric and string literals in your code to construct a user-defined type of object, much as 3.2f creates a float rather than the default double. However, there were no new types of literals provided by the standard library.

C++14 changes that. We now have user-defined literals for std::chrono::duration, so you can write 30s instead of std::chrono::seconds(30). To get this user-defined literal goodness you need to explicitly enable it in your code with a using directive: you might have other code that wants to use these suffixes for a different set of types, so the standard lets you choose.

That using directive is:

using namespace std::literals::chrono_literals;

The supported suffixes are:

h → std::chrono::hours
min → std::chrono::minutes
s → std::chrono::seconds
ms → std::chrono::milliseconds
us → std::chrono::microseconds
ns → std::chrono::nanoseconds
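The mapping from suffix to type can be verified at compile time, assuming a C++14 compiler. The using directive is placed at namespace scope here only to keep the sketch short:

```cpp
#include <cassert>
#include <chrono>
#include <type_traits>

// Opt in to the C++14 duration suffixes.
using namespace std::literals::chrono_literals;

// Integer literals with each suffix yield the named std::chrono duration type...
static_assert(std::is_same<decltype(2h), std::chrono::hours>::value, "h");
static_assert(std::is_same<decltype(2min), std::chrono::minutes>::value, "min");
static_assert(std::is_same<decltype(30s), std::chrono::seconds>::value, "s");
static_assert(std::is_same<decltype(50ms), std::chrono::milliseconds>::value, "ms");
static_assert(std::is_same<decltype(5us), std::chrono::microseconds>::value, "us");
static_assert(std::is_same<decltype(7ns), std::chrono::nanoseconds>::value, "ns");

// ...and they mix freely in constant expressions via the common duration type.
static_assert(1h == 60min, "an hour is sixty minutes");
static_assert(1min + 30s == 90s, "mixed units convert to seconds");
static_assert(1s == 1000ms && 1ms == 1000us && 1us == 1000ns, "SI steps");
```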

You can therefore wait for a shared ownership lock with a 50 millisecond timeout like this:

void foo(){
    using namespace std::literals::chrono_literals;
    std::shared_lock<std::shared_timed_mutex> lk(m,50ms);
    if(lk.owns_lock()){
        do_something_with_lock_held();
    }
    else {
        do_something_without_lock_held();
    }
}

Just::Thread support

As you might expect, Just::Thread provides an implementation of all of this. std::shared_timed_mutex is available on all supported platforms, but the constexpr and user-defined literal enhancements are only available for those compilers that support the new language features: gcc 4.6 or later for constexpr and gcc 4.7 or later for user-defined literals, with the -std=c++11 or -std=c++14 switch enabled in either case.

Get your copy of Just::Thread while our 10th anniversary sale is on for a 50% discount.

Posted by Anthony Williams

[/ threading /] permanent link

Tags: cplusplus, concurrency


10th Anniversary Sale

Wednesday, 01 July 2015

We started Just Software Solutions Ltd in June 2005, so we've now been in business for 10 years. We're continuing to thrive, with our consulting, training and development services, and sales of Just::Thread, all doing well.

To let our customers join in with our celebrations, we're running a month-long sale: for the whole of July 2015, Just::Thread and Just::Thread Pro will be available for 50% off the normal price.

Just::Thread is our implementation of the C++11 and C++14 thread libraries, for Windows, Linux and MacOSX. It also includes some of the extensions from the upcoming C++ Concurrency TS, with more to come shortly.

Just::Thread Pro is our add-on library which provides an Actor framework for easier concurrency, along with concurrent data structures: a thread-safe queue, a concurrent hash map, and a wrapper for ensuring synchronized access to single objects.

All licences include a free upgrade to point releases, so if you purchase now you'll get a free upgrade to all 2.x releases.

Posted by Anthony Williams

[/ news /] permanent link

Tags: sale


Design and Content Copyright © 2005-2025 Just Software Solutions Ltd. All rights reserved. | Privacy Policy