Blog Archive for / general /

Cryptography and Society

Tuesday, 05 May 2015

Politicians in both the UK and USA have been making moves towards banning secure encryption over the last few months. With the UK general election coming on Thursday I wanted to express why I think this is a seriously bad idea.

Context

Back in January there were some terrorist attacks in Paris. These attacks were and are a serious matter, and stopping such attacks in future should be something that governments concern themselves with.

However, one aspect of the response by politicians has been to call for securely encrypted communication to be outlawed. In particular, the British Prime Minister, David Cameron, asked

"In our country, do we want to allow a means of communication between people which, even in extremis with a signed warrant from the Home Secretary personally, that we cannot read?"

It was clear from the context that he thinks the answer is a resounding "NO", and from the further actions of politicians both here and in the USA it appears that others in government agree with that point of view. They clearly believe that the government should be able to read all communications.

I think in a fair, open, democratic society, the answer must be "YES": private individuals must be able to communicate without risk of eavesdropping by government officials.

Secure Encryption is Not New

Firstly, there have ALWAYS been means of communication between people that the government cannot read. You might be able to intercept a letter, and read the words written on the piece of paper, but if the message is not in the words as they appear, then you cannot read it.

Ciphers which could not be cracked by contemporary eavesdroppers have been used since at least the time of the Roman Empire. New technology merely provides a new set of such ciphers.

For example, the proper use of a one-time pad provides completely secure encryption. This technique has been in use since 1882, if not earlier.
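To make the one-time pad concrete, here is a minimal sketch of its modern byte-wise XOR form. The function names and the sample message are illustrative assumptions; the security relies entirely on the pad being truly random, kept secret, at least as long as the message, and never reused.

```python
import secrets

def otp_encrypt(message: bytes, pad: bytes) -> bytes:
    """XOR each message byte with the matching pad byte."""
    if len(pad) < len(message):
        raise ValueError("pad must be at least as long as the message")
    return bytes(m ^ p for m, p in zip(message, pad))

# XOR is its own inverse, so decryption is the same operation.
otp_decrypt = otp_encrypt

message = b"MEET AT NOON"
pad = secrets.token_bytes(len(message))  # random, secret, used once only
ciphertext = otp_encrypt(message, pad)
recovered = otp_decrypt(ciphertext, pad)
```

Without the pad, every plaintext of the same length is an equally plausible decryption of the ciphertext, which is why no amount of computing power helps an eavesdropper.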

Other technology in widespread use today "merely" makes it exceedingly difficult to break the cipher, requiring hundreds, thousands or even millions of years to crack with a brute-force method. These time periods are enough that these ciphers can be considered uncrackable for all intents and purposes.
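The scale of a brute-force search is easy to estimate with some rough arithmetic. The sketch below assumes an attacker who can test a trillion keys per second (a made-up figure chosen purely for illustration) and who finds the key after searching half of the key space on average.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60

def brute_force_years(key_bits: int, guesses_per_second: float) -> float:
    """Expected years to find a key by trying half of the key space."""
    expected_guesses = 2 ** key_bits / 2
    return expected_guesses / guesses_per_second / SECONDS_PER_YEAR

# Hypothetical attacker speed: 1e12 guesses per second.
for bits in (56, 80, 128):
    print(bits, f"{brute_force_years(bits, 1e12):.3g} years")
```

Each extra bit of key length doubles the expected search time, so modest increases in key size quickly move a cipher from "crackable in hours" to "longer than anyone can wait".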

Consequently, governments are powerless to actually prevent communication that cannot be read by the security services. All that can be done is to make it hard for the average citizen to use such communication.

Terrorists are Criminals

By their very nature, terrorists are criminals: terrorist acts themselves are illegal, and even the possession of the weapons used for the terrorist acts is often also illegal.

Therefore, terrorists will not be put off from using secure communication just because that too is illegal.

In particular, criminal organisations will not think twice about using whatever means is available to ensure that their communications are private: if something gives them an edge over the police or anyone else who would seek to stop them, they will use it.

Society relies on secure encryption

Secure encrypted communication doesn't just prevent government agencies reading the communications of criminals, it also prevents criminals reading the communications of ordinary citizens.

This website, in common with an increasingly large number of websites, uses HTTPS for all traffic. When properly configured this means that someone intercepting the website traffic cannot identify which pages on the website you visited, or extract any of the data sent by you as a visitor to the website, or by the website back to you.

This is crucial for facilities such as online banking: it prevents computer criminals from obtaining passwords and account data by intercepting the communications between you and your bank. If such communications could not be relied upon to be secure then online banking would not be viable, as the potential for fraud due to stolen passwords would be too great.

Likewise, many businesses use secure Virtual Private Networks (VPNs) which rely on secure encryption to transfer data between computers that are only connected to each other via the internet. This allows them to securely transfer data between sites, or between remote workers, without worrying about the communications being intercepted by criminals. Without secure encryption, many large multi-national businesses would be hugely impacted, as they wouldn't be able to rely on transferring data across the internet safely, and would instead have to rely on physical transfer via courier.

A "back door" or "government secret key" destroys the security of encryption

Some of the proposals from politicians have been to require that companies that provide encryption services must also provide a means whereby government security services can also decrypt the communications if required.

This requires that either (a) the company in question keeps a database of all the encryption/decryption keys used for all communications, or (b) the encryption algorithm used allows for decryption via a "back door" or "secret key" in addition to the standard decryption key, so that the government security services can gain access if required, without needing to know the customer's decryption key.

Keeping a database of the decryption keys just provides a direct target for attack by computer criminals. Once such a database is breached, none of the communications provided by that company can be considered secure. This is clearly not a good state of affairs, given the number of times that password databases get compromised.

That leaves option (b): providing a "back door" or "secret key", or other means whereby an otherwise-encrypted communication can be read by the security services. However, this fundamentally compromises that encryption.

Knowing that the back door exists, criminal computer crackers will work to ensure that they too can gain access to the communication, and they won't wait for a warrant from the Home Secretary or whatever government department is responsible for issuing such warrants! Any such group that does manage to obtain access would probably not make it public knowledge; they would merely use it to access communications that were relevant to them, whether because they had a direct use for the information, or because it could be sold to other criminal organisations.

If there is a single key that can decrypt all communication using a given system then that dramatically reduces the computational effort required to break the key: the larger the number of messages that are transmitted with a given key, the easier it is to identify the key, especially if you have access to the raw unencrypted message. The huge volume of electronic communications in use today would mean that the secret back door key would be much more readily compromised than any individual encryption key.

Privacy is a human right

The Universal Declaration of Human Rights was adopted by the UN in 1948. Article 12 states:

No one shall be subjected to arbitrary interference with his privacy, family, home or correspondence, nor to attacks upon his honour and reputation. Everyone has the right to the protection of the law against such interference or attacks.

Secure encrypted communication protects our correspondence from interference, including interference by the government.

Restricting the use of encryption is also a violation of the right to freedom of expression, guaranteed to us by article 19 of the Universal Declaration of Human Rights:

Everyone has the right to freedom of opinion and expression; this right includes freedom to hold opinions without interference and to seek, receive and impart information and ideas through any media and regardless of frontiers.

The restriction on our freedom of expression is easy to see: if I have true freedom of expression then I can impart any series of letters or numbers to anyone without interference. If that series of letters or numbers happens to be an encrypted message then that is of no consequence. Any attempt to limit the use of particular encryption algorithms therefore limits my ability to send whatever message I like, since particular sequences of letters and numbers are outlawed purely because of their meaning.

Human rights organisations such as Amnesty International use secure encrypted communications to communicate with their workers. If those communications could not be secured against interference then this would have a detrimental impact on their ability to do their humanitarian work, and could endanger their workers.

Encryption is mathematics

Computer encryption is just a mathematical algorithm applied to a series of numbers. It is ridiculous to consider that performing mathematical operations on a sequence of numbers could be outlawed merely because that sequence of numbers has meaning to someone.

End note

I strongly object to any move to restrict the use of encryption technology. It is technologically and morally unsound, with little or no upside and considerable downsides.

I urge politicians to likewise oppose any moves to restrict the use of encryption technology, and I urge those standing in the elections in the UK this week to make it known to their potential constituents that they will oppose such measures.

Finally, I think we should be encouraging the use of strong encryption rather than discouraging it, to protect us from those who would intercept our digital communication and use that for their gain and our detriment.

Posted by Anthony Williams

[/ general /] permanent link

Tags: cryptography, encryption, politics

If you liked this post, why not subscribe to the RSS feed or follow me on Twitter? You can also subscribe to this blog by email using the form on the left.

Firefox is losing market share to Chrome

Monday, 09 March 2015

I read with interest an article on Computerworld about Firefox losing market share, wondering what people were using instead. Unsurprisingly, the answer seems to be Chrome: apparently Chrome now has a 27.6% share compared to Firefox's 11.8%. That's quite a big difference.

I checked out the stats for this site for February 2015, and the figures bear it out: 30.7% of visitors use Chrome vs 14.9% Firefox and 12.8% Safari. Amusingly, 3.1% of visitors still use IE6!

What I did find interesting is the version numbers people are using: there were visitors using every version of Chrome from version 2 to version 43, and the same for Firefox — someone was even using Firefox 0.10! I'm a bit surprised by this, as I'd have thought that users of these browsers were probably amongst the most likely to upgrade.

Why the drop?

The big question of course is why the shift? I switched to Firefox because Internet Explorer was poor, and I've stuck with it, mainly through inertia, but I've used other browsers over the years, and still prefer Firefox. I've got Chrome installed on my desktop, but I don't particularly like it, and only really use it there for cross-browser testing. The one place I use it regularly is on my tablets, where it is the only browser I have installed: I tried Firefox for Android and was really disappointed.

Maybe that's the cause of the shift: everyone is using mobile devices for browsing, and Chrome/Safari are better than the others for mobile.

Which browser(s) do you use, and why?

Posted by Anthony Williams

[/ general /] permanent link

Tags: firefox, chrome, browsers


Gotchas Upgrading Apache from 2.2 to 2.4

Wednesday, 10 December 2014

I finally got round to upgrading one of my servers from Ubuntu 12.04 (the previous LTS release) to Ubuntu 14.04 (the latest LTS release). One of the consequences of this is that Apache gets upgraded from 2.2 to 2.4. Sadly, the upgrade wasn't as smooth as I'd hoped, so I'm posting this here in case anyone else has the same problem that I did.

Oh no! It's all broken!

It's nice to upgrade for various reasons — not least of which being the support benefits of being on the most recent LTS release — except after the upgrade, several of the websites hosted on the server stopped working. Rather than getting the usual web page, they just returned an "access denied" error page, and in the case of the https pages they just returned an SSL error. This was not good, and led to much frantic checking of config files.

After verifying that all the config files for all the sites were indeed correct, I finally resorted to googling the problem. It turns out that the default apache2.conf file was being used, as all the "important" config was in the module config files, or the site config files, so the upgrade had just replaced it with the new one.

Whereas the old v2.2 default config file has the line

    Include sites-enabled/

the new v2.4 default config file has the line

    IncludeOptional sites-enabled/*.conf

A Simple Fix

This caused problems with my server because many of the config files were named after the website (e.g. example.com) and did not have a .conf suffix. Renaming the files to e.g. example.com.conf fixed the problem, as would have changing the line in apache2.conf so it didn't force the suffix.
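With more than a handful of sites, the renaming can be scripted. This is a rough sketch rather than a tested migration tool: the function name and the dry-run default are my own choices, and it assumes the usual Debian/Ubuntu layout where the real files live in sites-available. Since sites-enabled normally holds symlinks to those files, the sites will need re-enabling (e.g. with a2ensite) after the rename.

```python
from pathlib import Path

def add_conf_suffix(sites_dir: str, dry_run: bool = True) -> list:
    """List (old, new) names for files lacking .conf; rename them unless dry_run."""
    renames = []
    # sorted() takes a snapshot, so renaming while looping is safe.
    for path in sorted(Path(sites_dir).iterdir()):
        if path.is_file() and path.suffix != ".conf":
            target = path.with_name(path.name + ".conf")
            if target.exists():
                continue  # never overwrite an existing config file
            renames.append((path.name, target.name))
            if not dry_run:
                path.rename(target)
    return renames
```

Calling `add_conf_suffix("/etc/apache2/sites-available")` first shows what would change; passing `dry_run=False` performs the renames.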

Access Control

The other major change is to the access control directives. Old Allow and Deny directives are replaced with new Require directives: for example, "Order allow,deny" followed by "Allow from all" becomes "Require all granted". The access_compat module is intended to allow the old directives to work as before, but it's probably worth checking if you use any in your website configurations.

Exit Stage Left

Thankfully, all this was happening on the staging server, so the websites weren't down while I investigated. Testing is important — what was supposed to be just a simple upgrade turned out not to be, and without a staging server the websites would have been down for the duration.

Posted by Anthony Williams

[/ general /] permanent link

Tags: apache


Migrating to https

Thursday, 30 October 2014

Having intended to do so for a while, I've finally migrated our main website to https. All existing links should still work, but will redirect to the https version of the page.

Since this whole process was not as simple as I'd have liked it to be, I thought I'd document the process for anyone else who wants to do this. Here's the quick summary:

- Buy an SSL certificate

- Install it on your web server

- Set up your web server to serve https as well as http

- Ensure that any external scripts or embedded images used by the website are retrieved with https

- Redirect the http version to https

Now let's dig in to the details.

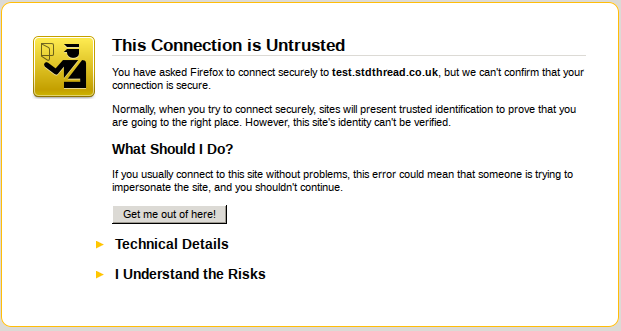

1. Buy an SSL certificate

For testing purposes, a self-signed certificate works fine. However, if you want to have people actually use the https version of your website then you will need to get a certificate signed by a recognized certificate authority. If you don't then your visitors will be presented with a certificate warning when they view the site, and will probably go somewhere else instead.

The SSL certificate used on https://www.justsoftwaresolutions.co.uk was purchased from GarrisonHost, but there are plenty of other certificate providers available.

Purchasing a certificate is not merely a matter of entering payment details on a web form. You may well need to provide proof of who you are and/or company registration certificates in order to get the purchase approved. Once that has happened, you will need to get your certificate signed and install it on your web server.

2. Install the SSL certificate on your web server

In order to install the certificate on your web server, it first has to be signed by the certification authority so that it is tied to your web server. This requires a Certificate Signing Request (CSR) generated on your web server. With luck, your certification provider will give you nice instructions. In most cases, you're probably looking at the openssl req command, something like:

    openssl req -new -newkey rsa:2048 -nodes -out common.csr \
        -keyout common.key \
        -subj "/C=GB/ST=Your State or County/L=Your City/O=Your Company/OU=Your Department/CN=www.yourcompany.com"

This will give you a private key (common.key) and a CSR file (common.csr). Keep the private key private, since this is what identifies the web server as your web server, and give the CSR file to your certificate provider.

Your certificate provider will then give you a certificate file, which is your web server certificate, and possibly a certificate chain file, which provides the signing chain from your certificate back to one of the widely-known root certificate providers. The certificate chain file will be identical for anyone who purchased a certificate signed by the same provider.

You now need to put three files on your web server:

- your private key file,

- your certificate file, and

- the certificate chain file.

Ensure that the permissions on these only allow the user running the web server to access them, especially the private key file.
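As a quick sanity check on those permissions, something like the following sketch can confirm that a file is closed to everyone but its owner. It assumes a POSIX system; the helper names are invented for this example, and which user should own the files depends on how your web server is started.

```python
import os
import stat

def is_private(path: str) -> bool:
    """True if the file grants no permissions at all to group or others."""
    mode = stat.S_IMODE(os.stat(path).st_mode)
    return mode & (stat.S_IRWXG | stat.S_IRWXO) == 0

def make_private(path: str) -> None:
    """Restrict the file to owner read/write only (the same as chmod 600)."""
    os.chmod(path, stat.S_IRUSR | stat.S_IWUSR)
```

Running `is_private` over the private key, certificate and chain files after copying them to the server is a cheap way to catch a key that was left world-readable.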

You now need to set up your web server to use them.

3. Set up your web server to serve https as well as http

I'm only going to cover apache here, since that's what is used for https://www.justsoftwaresolutions.co.uk; if you're using something else then you'll have to check the documentation.

Firstly, you'll need to ensure that mod_ssl is installed and enabled. Run

    sudo a2enmod ssl

on your web server. If it complains that "module ssl does not exist" then follow your platform's documentation to get it installed and try again. On Ubuntu it is part of the basic apache installation.

Now you need a new virtual host file for https. Create one in the sites-available directory with the following contents:

    <IfModule mod_ssl.c>
    <VirtualHost *:443>
        ServerAdmin webmaster@yourdomain.com
        ServerName yourdomain.com
        ServerAlias www.yourdomain.com

        SSLEngine On
        SSLCertificateFile /path/to/certificate.crt
        SSLCertificateKeyFile /path/to/private.key
        SSLCertificateChainFile /path/to/certificate/chain.txt

        # Handle shutdown in broken browsers
        BrowserMatch "MSIE [2-6]" \
            nokeepalive ssl-unclean-shutdown \
            downgrade-1.0 force-response-1.0
        BrowserMatch "MSIE [17-9]" ssl-unclean-shutdown

        DocumentRoot /path/to/ssl/host/files
        <Directory "/path/to/ssl/host/files">
            # directory-specific apache directives
        </Directory>

        # Pass SSL_* environment variables to scripts
        <FilesMatch "\.(cgi|shtml|phtml|php)$">
            SSLOptions +StdEnvVars
        </FilesMatch>
    </VirtualHost>
    </IfModule>

This is a basic configuration: you'll also want to ensure that any configuration directives you need for your website are present.

You'll also want to edit the config for mod_ssl. Open up mods-available/ssl.conf from your apache config directory, and find the SSLCipherSuite, SSLHonorCipherOrder and SSLProtocol directives. Update them to the following:

    SSLHonorCipherOrder on
    SSLCipherSuite ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:ECDH+3DES:DH+3DES:RSA+AESGCM:RSA+AES:RSA+3DES:!aNULL:!MD5:!DSS
    SSLProtocol all -SSLv2 -SSLv3
    SSLCompression off

This disables the protocols and ciphers that are known to be insecure at the time of writing. If you're reading this much after publication, or wish to be certain, please do a web search for the latest secure cipher lists, or check a resource such as https://wiki.mozilla.org/Security/Server_Side_TLS.

After all these changes, restart the web server:

    sudo apache2ctl restart

You should now be able to visit your website with https:// instead of http:// as the protocol. Obviously, the content will only be the same if you've set it up to be.

Now the web server is running, you can check the security using an SSL checker like https://sslcheck.globalsign.com, which will test for available cipher suites, and ensure that your web server is not vulnerable to known attacks.

Now you need to ensure that everything works correctly when accessed through https. One of the big issues is embedded images and external scripts.

4. Ensure that https is used for everything on https pages

If you load a web page with https, and that page loads images or scripts using http, your browser won't be happy. At the very least, the nice "padlock" icon that indicates a secure site will not show, but you may get a popup, and the insecure images or scripts may not load at all. None of this leads to a nice visitor experience.

It is therefore imperative that on a web page viewed with https all images and scripts are loaded with https.

The good news is that relative URLs inherit the protocol, so an image URL of "/images/foo.png" will use https on an https web page. The bad news is that on a reasonably sized web site there are probably quite a few images and scripts with full URLs that specify plain http, not least because things like blog entries that may be read in a feed reader often need to specify full URLs for embedded images to ensure that they show up correctly in the reader.

If all the images and scripts are on servers you control, then you can ensure that those servers support https (with this guide), and then switch to https in the URLs for those resources. For servers outside your control, you need to check that https is supported, which can be an issue.

Aside: you could make the URLs use https on https pages and http on http pages by omitting the protocol, so "http://example.com/images/foo.png" would become "//example.com/images/foo.png". However, using https on plain http pages is fine, and it is generally better to use https where possible. It's also more straightforward.

If the images or scripts are on external servers which you do not control, and which do not support https then you can use a proxy wrapper like camo to avoid the "insecure content" warnings. However, this still requires changing the URLs.

For static pages, you can do a simple search and replace, e.g.

    sed -i -e 's/src="http:/src="https:/g' *.html

However, if your pages are processed through a tool like Markdown, or stored in a CMS, then you might not have that option. Instead, you'll have to trawl through the links manually, which could well be rather tedious. There are websites that will tell you which items on a given page are insecure, and you can read the warnings in your browser, but you've still got to check each page and edit the URLs manually.
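Finding the offending URLs in the first place can at least be scripted. The sketch below uses Python's standard html.parser module to list every src attribute that explicitly uses plain http. The class and function names are my own, and like the sed command it only looks at src attributes, so stylesheets pulled in via link href would need a similar check.

```python
from html.parser import HTMLParser

class InsecureResourceFinder(HTMLParser):
    """Collect (tag, url) pairs for src attributes that use plain http."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name == "src" and value and value.lower().startswith("http://"):
                self.insecure.append((tag, value))

def find_insecure_resources(html: str) -> list:
    """Return every (tag, url) in the page that would trigger a mixed-content warning."""
    finder = InsecureResourceFinder()
    finder.feed(html)
    return finder.insecure
```

Feeding it the rendered HTML of each page (rather than the Markdown or CMS source) gives a checklist of URLs to fix, whatever tool generated the page.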

While you're doing this, it's as well to check that everything else works correctly. I found that a couple of aspects of the blog engine needed adjusting to work correctly with https due to minor changes in the VirtualHost settings.

When you've finally done that, you're ready to permanently switch to https.

5. Redirect the http version to https

This is by far the easiest part of the whole process. Open the apache config for the plain http virtual host and add one line:

    Redirect permanent / https://www.yourdomain.com/

This will redirect http://www.yourdomain.com/some/path to https://www.yourdomain.com/some/path with a permanent (301) redirect. Note the trailing slash on the target: Redirect appends whatever follows the matched "/" to the target URL, so without it the path would be glued directly onto the host name. All existing links to your web site will now redirect to the equivalent page with https.

When you've done that then you can also enable Strict Transport Security. This ensures that when someone connects to your website then they get a header that says "always use https for this site". This prevents anyone intercepting plain http connections (e.g. on public wifi) and attacking your visitors that way.

You do this by enabling mod_headers, and then updating the https virtual host. Run the following on your web server:

    sudo a2enmod headers

and then add the following line to the virtual host file you created above for https:

    Header always set Strict-Transport-Security "max-age=63072000; includeSubDomains"

Then you will need to restart apache:

    sudo apache2ctl restart

This will ensure that any visitor that now visits your website will always use https if they visit again within 2 years from the same computer and browser. Every time they visit, the clock is reset for another 2 years.
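The "2 years" figure comes straight from the max-age value, which is given in seconds. A small sketch (with a made-up helper name, and ignoring the quoted-value form that the header syntax also permits) shows the conversion:

```python
def max_age_days(header_value: str) -> float:
    """Extract max-age from a Strict-Transport-Security value, converted to days."""
    for directive in header_value.split(";"):
        name, _, value = directive.strip().partition("=")
        if name.lower() == "max-age":
            return int(value) / 86400  # 86400 seconds in a day
    raise ValueError("no max-age directive found")

days = max_age_days("max-age=63072000; includeSubDomains")
```

Here `days` works out at 730, i.e. two 365-day years. While testing, a much smaller max-age is worth considering, since browsers will remember the policy for the full period even if you later change your mind.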

You can probably delete most of the remaining settings from the plain http virtual host config, since everything is being redirected, but there is little harm in leaving them there for now.

All done

That's all there is to it. Relatively straightforward, but some parts are more involved than one might like.

Posted by Anthony Williams

[/ general /] permanent link

Tags: https, ssl, http


Memories of Learning C

Monday, 17 October 2011

Herb Sutter's latest blog entry invites us to share our memories of our first C program, in tribute to Dennis Ritchie. I can't remember what my first C program was, but I thought I'd write about my memories of learning C.

I studied Physics at college, and there was very little programming taught as part of the course. That didn't bother me though; I'd taught myself to program up until then, and I wasn't going to stop now. The big benefit I got from computing at college was access to the internet, and access to C and C++ compilers. I could program in BASIC, Pascal and a couple of forms of assembly language, and I'd eagerly read Stan Lippman's C++ Primer and written out (on paper!) some C++ code, but I hadn't yet had a C++ compiler to try out my programs on.

I wrote several C++ programs before I even considered writing a plain C program, but I probably typed in and compiled the classic printf("hello world\n"); C program to check everything was working before I compiled any C++.

Usenet

My strongest memories about learning C are about learning from usenet. Though I had access to C compilers at college, access to experts was not so readily available unless you were studying computing. With access to the internet, I didn't need local experts though — usenet provided access to experts from across the world. I read comp.lang.c and comp.lang.c++ avidly, and taught myself both languages together. The usenet community was invaluable to me. The wealth of knowledge that people had, and their willingness to share with newbies was something I really appreciated.

I remember struggling over file handling, and getting the arguments to scanf right; I remember puzzling over the poor performance of a program and having someone kindly point out that my code was doing malloc and free calls in a tight loop. Though I tend to answer more questions than I ask these days, I still hang out on newsgroups such as comp.lang.c++ today. It seems that for many people StackOverflow has replaced usenet as the place to go for help, but the old-style newsgroups are still valuable.

Ubiquity

Back then, C++ compilers were in their infancy. Templates didn't work on every compiler, there was no STL, and many platforms didn't have a working C++ compiler at all. I consequently wrote a lot of C — every platform had a C compiler, and my C code would work on the college PCs, my PC (when I saved up enough to buy one), the University's Unix machine, and the Physics department workstations. The same could not be said for C++.

The ubiquity of C is something I still appreciate today, and this is only possible because Dennis Ritchie designed his language to be portable to multiple platforms. Though "implementation defined" behaviour can be frustrating when the implementation defines it a different way to how you would like, it is this that enables the portability. You want to write code for a DSP that only handles 32-bit data? Fine: make char, short, int and long all 32 bits. What if your machine has 9-bit bytes? No problem: just make char 9 bits, and everything else a convenient multiple of that.

C is the basic lingua-franca of the computing world. It is a "portable assembly language". These days I use C++ where I can because it allows a higher level of abstraction, and easier expression of intent without compromising on the performance you'd get with plain C, but it's not as portable, and wouldn't be possible without C.

The computing world owes a lot to Dennis Ritchie.

Posted by Anthony Williams

[/ general /] permanent link

Tags: C, nostalgia


Computer Education

Wednesday, 12 October 2011

Andrew Hague's post on Computer Education in Britain touches on something I've been discussing with my wife recently.

The travesty of ICT

Our children do not get taught anything at school about how computers work, or how to program them, and are unlikely to. Andrew says he found that "the study of computer science for British children ends at about age 11." This doesn't tally with my experience of primary schools, where the children are never taught any computer science at all. Local secondary schools are proud of their ICT suites, with office programs and image editing programs galore, but not a single class on the basics of computers and programming.

Part of the problem is the complexity of modern computers. Whereas the computers we grew up with (the Dragon 32, BBC Micro, ZX Spectrum, Commodore 64, etc.) were simple beasts, and booted into a programming environment (BASIC), modern computers are complex beasts with a swish graphical OS with no native programming environment. Yes, you can use Javascript in a browser, or the macro language in office programs, but it's not the same. PCs do not invite programming the same way that the older computers did, and you have to go out of your way to provide a basic programming environment.

Schools could overcome this hurdle, and provide programming environments, but they don't. Instead they teach everyone how to use the latest versions of office programs, despite the fact that next year's release will have a different UI, and different capabilities. Yes, children need to be computer-savvy, due to the prevalence of computers in everyday life, but they don't need to be experts in using word processors. Rather, they should be taught how to learn to use the programs, the things that are common about them (e.g. menus), how to get help (the help menu, Google), and so forth, and then taught about how computers work. Yes, use a word processor for writing the occasional thing in English, or use a spreadsheet for doing some data analysis in Geography, but the "computing" lessons should be about programming and how computers work at the basic level, rather than how to use popular software.

I think this lack of teaching about the basics of computing has a wider effect than just a lack of new programmers. The computer is something that people don't understand, but which they rely on. This can give people a sense of powerlessness, especially when it does something unexpected. I've had to help people who've been all in a panic because they "lost their work". It didn't appear in the list that was presented in the "open file" dialog, so it was "lost". Somehow they had saved it in a different directory, and their lack of understanding about the file system meant they didn't know how to find it, and panicked: the computer that they relied on had "lost" their important work. Teaching about the basics of modern operating systems (rather than the specifics of the software package being used) would have alleviated this fear.

Addressing the Problem

So, what is to be done? Firstly, as programming parents we can teach our children about computers and programming, which is something that my wife and I have started doing. But beyond that, we need to make the schools, colleges and government aware of the issues.

Andrew points to the Computing at School working group and the Behind the Screen project, both of which seem promising. However, without support these projects will fizzle, and our children will continue to be taught how to use office software rather than computing principles.

Posted by Anthony Williams

[/ general /] permanent link

Tags: computing, education, schools

Importing an Existing Windows XP Installation into VirtualBox

Wednesday, 03 June 2009

On Monday morning one of the laptops I use for developing software died. It wasn't a complete "nothing happens when I turn it on" kind of death (it still runs the POST checks OK), but it rebooted of its own accord whilst compiling some code and now no longer boots into Windows (no boot device, apparently). Now, I really didn't fancy having to install everything from scratch, and I've become quite a big fan of VirtualBox recently, so I thought I'd import it into VirtualBox. How hard could it be? The answer, I discovered, was "quite hard".

Since it seems that several other people have tried to import an existing Windows XP installation into VirtualBox and had problems doing so, I thought I'd document what I did for the benefit of anyone who is foolish enough to try this in the future.

Step 1: Clone the Disk into VirtualBox

The first thing to do is clone the disk into VirtualBox. I have a handy laptop disk caddy lying around in my office which enables you to convert a laptop hard disk into an external USB drive, so I took the disk out of the laptop and put it in that. I connected the drive to my Linux box, and mounted the partition. A quick look round seemed to indicate that the partition was in working order and the data intact. So far so good. I unmounted the partition again, in preparation for cloning the disk.

I started VirtualBox and created a new virtual machine with a virtual disk the same size as the physical disk. I then booted the VM with the System Rescue CD that I use for partitioning and disk backups. You might prefer to use another disk cloning tool.

Once the VM was up and running, I connected the USB drive to the VM using VirtualBox's USB support and cloned the physical disk onto the virtual one. This took a long time, considering it was only a 30GB disk. Someone will probably tell me that there are quicker ways of doing this, but it worked, and I hope I don't have to do it again.

Step 2: Try (and fail) to boot Windows

Once the clone was complete, I disconnected the USB drive and unmapped the System Rescue CD and rebooted the VM. Windows started to boot, but would hang on the splash screen. If you're trying this and Windows now boots in your VM, be very glad.

Booting into safe mode showed that the hang occurred after loading "mup.sys". It seems lots of people have had this problem, and mup.sys is not the problem — the problem is that the hardware drivers configured for the existing Windows installation don't match the VirtualBox emulated hardware in some drastic fashion. This is not surprising if you think about it. Anyway, like I said, lots of people have had this problem, and there are lots of suggested ways of fixing it, like booting with the Windows Recovery Console and adjusting which drivers are loaded, using the "repair" version of the registry and so forth. I tried most of them, and none worked. However, there was one suggestion that seemed worth following through, and it was a variant of this that I finally got working.

Step 3: Install Windows into a new VM

The suggestion that I finally got working was to install Windows on a new VM and swipe the SYSTEM registry hive from there. This registry hive contains all the information about your hardware that Windows needs to boot, so if you can get Windows booting in a VM then you can use the corresponding SYSTEM registry hive to boot the recovered installation. At least that's the theory; in practice it needs a bit of hackery to make it work.

Anyway, I installed Windows into the new VM, shut it down, and rebooted it with the System Rescue CD to retrieve the SYSTEM registry hive: C:\Windows\System32\config\SYSTEM. You cannot access this file while Windows is running. I then booted my original VM with the System Rescue CD and copied the registry hive over, making sure I had a backup of the original. If you're doing this yourself don't change the hive on your original VM yet.

The system now booted into Windows. Well, almost — it booted up, but then displayed an LSASS message about being unable to update the password and rebooted. This cycle repeats ad infinitum, even in Safe Mode. So far not so good.

Step 4: Patching the SYSKEY

In practice, Windows installations have what's called a SYSKEY in order to prevent unauthorized people logging on to the system. This is a checksum of some variety which is spread across the SAM registry hive (which contains the user details), the SYSTEM hive and the SECURITY hive. If the SYSKEY is wrong, the system will boot up, but then display the message I was getting about LSASS being unable to update the password and reboot. In theory, you should be able to update all three registry hives together, but then all your user accounts get replaced, and I didn't fancy trying to get everything set up right again. This is where the hackery comes in, and where I am thankful to two people: firstly Clark from http://www.beginningtoseethelight.org/ who wrote an informative article on the Windows Security Accounts Manager which explains how the SYSKEY is stored in the registry hives, and secondly Petter Nordahl-Hagen who provides a boot disk for offline Windows password and registry editing.

According to the article on the Windows Security Accounts Manager, the portion of the SYSKEY stored in the SYSTEM hive is stored as class key values on a few registry keys. Class key values are hidden from normal registry accesses, but Petter Nordahl-Hagen's registry editor can show them to you. So, I restored the original SYSTEM hive (I was glad I made a backup) and booted the VM from Petter's boot disk and looked at the class key values on the ControlSet001\Control\Lsa\Data, ControlSet001\Control\Lsa\GBG, ControlSet001\Control\Lsa\JD and ControlSet001\Control\Lsa\Skew1 keys from the SYSTEM hive. I noted these down for later. The values are all 16 bytes long: the ASCII values for 8 hex digits with null bytes between.
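As a small illustration of that 16-byte layout, here is a Python sketch that converts between the 8 hex digits you note down and the bytes you would see in a hex editor. It assumes that "null bytes between" means each ASCII digit is followed by a single zero byte (which is what UTF-16LE text looks like); the function names and the sample value are made up for the example and do not come from any real hive.

```python
HEX_DIGITS = set("0123456789abcdefABCDEF")


def class_value_to_bytes(hex_digits: str) -> bytes:
    """Encode 8 hex digits as 16 bytes: each ASCII digit followed by a null byte."""
    if len(hex_digits) != 8 or not set(hex_digits) <= HEX_DIGITS:
        raise ValueError("expected exactly 8 hex digits")
    # ASCII characters encoded as UTF-16LE are the ASCII byte followed by 0x00.
    return hex_digits.encode("utf-16-le")


def bytes_to_class_value(raw: bytes) -> str:
    """Decode the 16-byte form back into the 8 hex digits."""
    if len(raw) != 16:
        raise ValueError("expected exactly 16 bytes")
    text = raw.decode("utf-16-le")
    if not set(text) <= HEX_DIGITS:
        raise ValueError("decoded text is not 8 hex digits")
    return text


# Hypothetical value, purely for illustration.
sample = class_value_to_bytes("1a2b3c4d")
print(sample.hex(" "))
```

Having the byte form to hand makes it easier to recognise the sequences when scanning through the hive by eye.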

Now for the hackery itself: I loaded the new SYSTEM hive (from the working Windows VM) into a hex editor and searched for the GBG key. The text should appear in a few places: one for the subkey of ControlSet001, one for the subkey of ControlSet002, and so forth. A few bytes after one of the occurrences you should see a sequence of 16 bytes that looks similar to the codes you wrote down: ASCII values for hex digits separated by null bytes. Make a note of the sequence that is there and replace it with the GBG class key value you noted down from the original installation. Do the same for the Data, JD and Skew1 values. Near the Data values you should also see the same hex digit sequence without the separating null bytes. Replace that too. Now look at the values in the file near to where the registry key names occur to see if there are any other occurrences of the sequences you replaced, and replace these with the new values as well.
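I did all of this by hand in a hex editor, but the search-and-replace part could be sketched in Python along the following lines. This is only an illustration under some assumptions: the values appear in the null-separated form (treated here as UTF-16LE) and in a plain ASCII form, old_hex is the sequence currently in the hive copy and new_hex is the one you want in its place, and the function names and file names are hypothetical. It replaces every occurrence in the file, which is blunter than checking each one near the key names, so it reports the offsets it touched for you to verify in a hex editor.

```python
from pathlib import Path

HEX_DIGITS = set("0123456789abcdefABCDEF")


def patch_value(data: bytes, old_hex: str, new_hex: str):
    """Replace old_hex with new_hex in both the null-separated and plain forms.

    Returns the patched bytes and a list of (encoding, offset) hits.
    """
    for value in (old_hex, new_hex):
        if len(value) != 8 or not set(value) <= HEX_DIGITS:
            raise ValueError("expected exactly 8 hex digits")
    hits = []
    for encoding in ("utf-16-le", "ascii"):
        old = old_hex.encode(encoding)
        new = new_hex.encode(encoding)  # same length as old, so offsets are preserved
        start = data.find(old)
        while start != -1:
            hits.append((encoding, start))
            start = data.find(old, start + len(old))
        data = data.replace(old, new)
    return data, hits


def patch_file(src: Path, dst: Path, pairs):
    """Apply patch_value for each (old_hex, new_hex) pair, writing the result to dst."""
    data = src.read_bytes()
    all_hits = []
    for old_hex, new_hex in pairs:
        data, hits = patch_value(data, old_hex, new_hex)
        all_hits.extend(hits)
    dst.write_bytes(data)  # src is left untouched
    return all_hits
```

Writing to a separate output file keeps the unpatched hive available if the result does not boot.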

Save the patched SYSTEM registry hive and copy it into the VM being recovered.
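If you are copying hives around from a rescue environment, it is worth keeping a copy of whatever you overwrite. A minimal Python sketch of that habit is below; the function name, the ".orig" suffix and any paths you pass in are hypothetical, and it assumes the recovered partition is already mounted somewhere writable.

```python
import shutil
from pathlib import Path


def install_with_backup(target: Path, replacement: Path, suffix: str = ".orig") -> Path:
    """Copy replacement over target, keeping the old target as target + suffix.

    Refuses to run if the backup already exists, so an earlier backup is
    never silently overwritten.
    """
    backup = target.with_name(target.name + suffix)
    if backup.exists():
        raise FileExistsError(f"backup already exists: {backup}")
    shutil.copy2(target, backup)       # preserve the current hive first
    shutil.copy2(replacement, target)  # then put the patched hive in place
    return backup
```

Refusing to overwrite an existing backup means that repeated attempts cannot destroy the one copy of the hive you started with.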

Now for the moment of truth: boot the VM. If you've patched all the values correctly then it will boot into Windows. If not, then you'll get the LSASS message again. In this case, try booting into the "Last Known Good Configuration". This might work if you missed one of the occurrences of the original values. If it still doesn't work, load the hive back into your hex editor and have another go.

Step 5: Activate Windows and Install VirtualBox Guest Additions

Once Windows has booted successfully, it will update the SYSKEY entries across the remaining ControlSetXXX entries, so you don't need to worry if you missed some values. You'll need to re-activate Windows XP due to the huge change in hardware, but this should be relatively painless: if you enable a network adapter in the VM configuration then Windows can access the internet through your host's connection seamlessly. Once that's done you can proceed with installing the VirtualBox guest additions to make it easier to work with the VM: mouse pointer integration, sensible screen resolutions, shared folders and so forth.

Was it quicker than installing everything from scratch? Possibly: I had a lot of software installed. It was certainly a lot more touch-and-go, and it was a bit scary patching the registry hive in a hex editor. It was quite fun though, and it felt good to get it working.

Posted by Anthony Williams

[/ general /] permanent link

Tags: virtualbox

Design and Content Copyright © 2005-2024 Just Software Solutions Ltd. All rights reserved. | Privacy Policy